Ever had a machine learning model that performs exceptionally well on training data but falters when faced with new, unseen data? That’s the classic case of overfitting.

But don’t worry! In this post, we’ll explore how to use regularization methods to prevent overfitting and improve the model’s performance on unseen data.

Let’s begin!

Overview

1 – Overfitting

Overfitting occurs when a model learns to capture noise or random fluctuations in the training data rather than the underlying pattern. This makes the model work well on seen data but not on unseen data.

To ensure that the model works well on unseen data, we need to minimize the generalization error. We can achieve this either by using simpler models or by using regularization to keep a deep learning model from exploiting its full capacity.

2 – Regularization

The best-performing models tend to be large but are trained in a way that restricts the use of their full capacity. This strategy is called “Regularization”.

Regularization techniques encourage the model to prefer simpler solutions without making any part of the hypothesis space completely unavailable.

The aim is to reduce the risk of overfitting without significantly increasing the bias. There are several ways to regularize a model. Commonly used strategies include:

- L1 and L2 Regularization

- Dropout

- Early Stopping

i. L1 Regularization

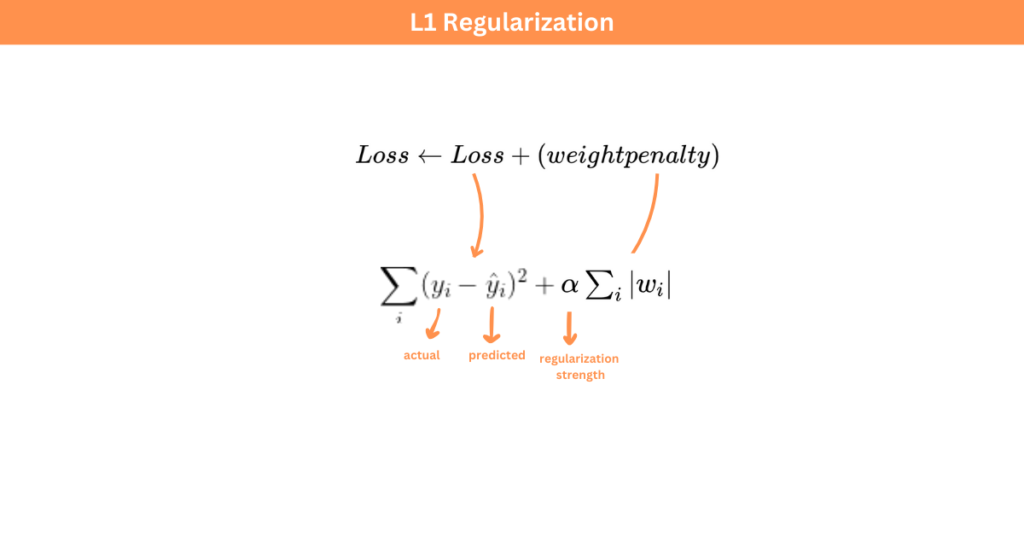

A common type of regularization adds a weight decay term to the loss function. This term constrains the model complexity by penalizing large weights.

In L1 regularization, the sum of the absolute values of the weights is added as the weight decay term to the loss function. This is also known as “Lasso”.

A key property of L1 regularization is that it leads to sparser weights. In other words, it drives less important weights to zero. Therefore, it acts like a natural feature selector.
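As a rough illustration, the L1 term can be sketched in plain Python. The exact formula from the figure is not reproduced here, so the form `alpha * sum(|w|)`, the function names, and the example weights are assumptions made for this sketch; `alpha` plays the same role as the regularization strength \alpha used for L2 regularization.

```python
def l1_penalty(weights, alpha):
    """Return alpha times the sum of the absolute weight values."""
    return alpha * sum(abs(w) for w in weights)


def l1_regularized_loss(data_loss, weights, alpha):
    """Add the L1 penalty to an already computed data loss (e.g. MSE)."""
    return data_loss + l1_penalty(weights, alpha)


# Made-up weights: the zero entry contributes nothing to the penalty,
# which is why driving weights to exactly zero is rewarded.
weights = [0.5, -2.0, 0.0, 1.5]
print(l1_penalty(weights, alpha=0.1))
print(l1_regularized_loss(data_loss=1.0, weights=weights, alpha=0.1))
```

Every non-zero weight pays a cost proportional to its absolute value, so the cheapest way to lower the penalty is often to switch unimportant weights off entirely.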

ii. L2 Regularization

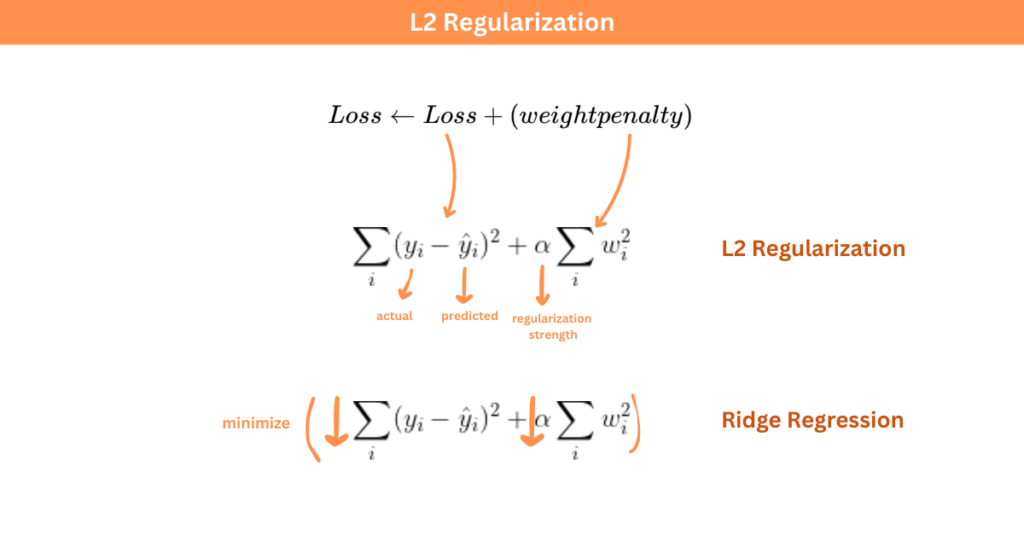

In this type of regularization, the sum of squares of weights is added as a weight decay term to the loss function.

For example, suppose our loss function is the Mean Squared Error (MSE) and we add the sum of squares of the weights to it as a weight decay term. Here \alpha determines the strength of regularization.

- \alpha = 0 disables the regularizer

- A large \alpha may lead to underfitting

When we minimize this regularized loss function, the optimization algorithm tries to decrease both the original loss and the squared magnitude of the weights, which expresses a preference for smaller weights. Regression with L2 regularization is called “Ridge Regression”.
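The regularized loss described above can be sketched for a linear model as follows. This is a minimal sketch, assuming the penalty takes the form `alpha * sum(w**2)` with no extra scaling factor (some formulations add a factor of 1/2); the function names and the tiny dataset are hypothetical.

```python
def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def l2_penalty(weights, alpha):
    """Return alpha times the sum of squared weights."""
    return alpha * sum(w * w for w in weights)


def ridge_loss(X, y, weights, alpha):
    """MSE of a linear model (no bias term) plus the L2 penalty."""
    preds = [sum(w * x for w, x in zip(weights, row)) for row in X]
    return mse(y, preds) + l2_penalty(weights, alpha)


# Made-up data that the weights [1.0, 2.0] fit perfectly.
X = [[1.0, 0.0], [0.0, 1.0]]
y = [1.0, 2.0]
w = [1.0, 2.0]

print(ridge_loss(X, y, w, alpha=0.0))  # data loss only, regularizer disabled
print(ridge_loss(X, y, w, alpha=0.1))  # same fit, plus the cost of the weights
```

With `alpha=0.0` only the data loss remains, and increasing `alpha` makes the same weights progressively more expensive, which is what pushes the optimizer toward smaller weights.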

Another thing to note here is:

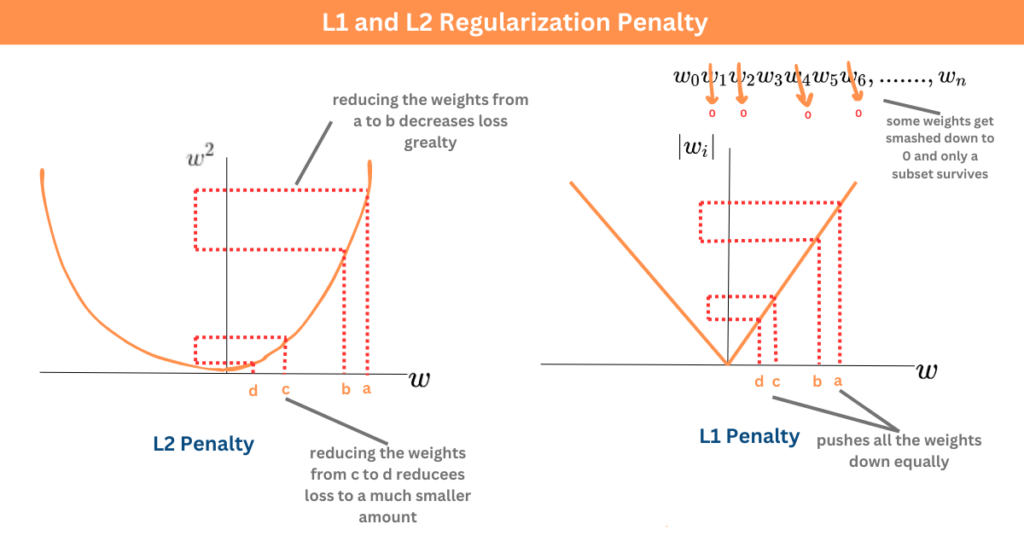

L2 Regularization penalizes smaller weights less than larger weights since it tries to minimize the squared magnitude of the weights. So, there isn’t a big incentive for the model to drive smaller weights to 0.

For example, reducing a large weight from a to b decreases the loss greatly, whereas reducing a smaller weight by the same amount decreases the loss by a much smaller amount (going from 10 to 9 lowers the squared term by 19, while going from 1 to 0 lowers it by only 1).

L1 regularization, in contrast, pushes all the weights down by the same amount regardless of their size. As a result, some of the weights are driven all the way to 0 and only a subset of the weights survives.

Although L1 regularization has a nice sparsity property, it rarely leads to significantly better performance than L2 regularization.
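The difference between the two penalties can be made concrete by applying only the penalty's update to a large and a small weight. This is an illustrative sketch, not a full training loop: the data loss is ignored, the values of `alpha`, `lr`, and the starting weights are made up, and the L1 step is clipped at zero (a soft-thresholding style update, chosen here so the step does not overshoot past zero).

```python
def l2_step(w, alpha, lr):
    """One gradient step on the penalty alpha * w**2 alone."""
    return w - lr * 2.0 * alpha * w


def l1_step(w, alpha, lr):
    """One step on the penalty alpha * |w| alone, clipped so it stops at zero."""
    delta = lr * alpha
    if abs(w) <= delta:
        return 0.0
    return w - delta if w > 0 else w + delta


def apply_steps(weights, step, alpha=0.1, lr=0.1, n_steps=100):
    """Apply the same penalty-only step repeatedly to every weight."""
    for _ in range(n_steps):
        weights = [step(w, alpha, lr) for w in weights]
    return weights


start = [2.0, 0.05]  # one large and one small weight
print("L2:", apply_steps(start, l2_step))
print("L1:", apply_steps(start, l1_step))
```

Under L2 both weights shrink by the same factor each step, so the small weight gets ever smaller but never reaches zero. Under L1 both weights lose the same fixed amount each step, so the small weight hits exactly zero while the large one survives.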

iii. Early Stopping

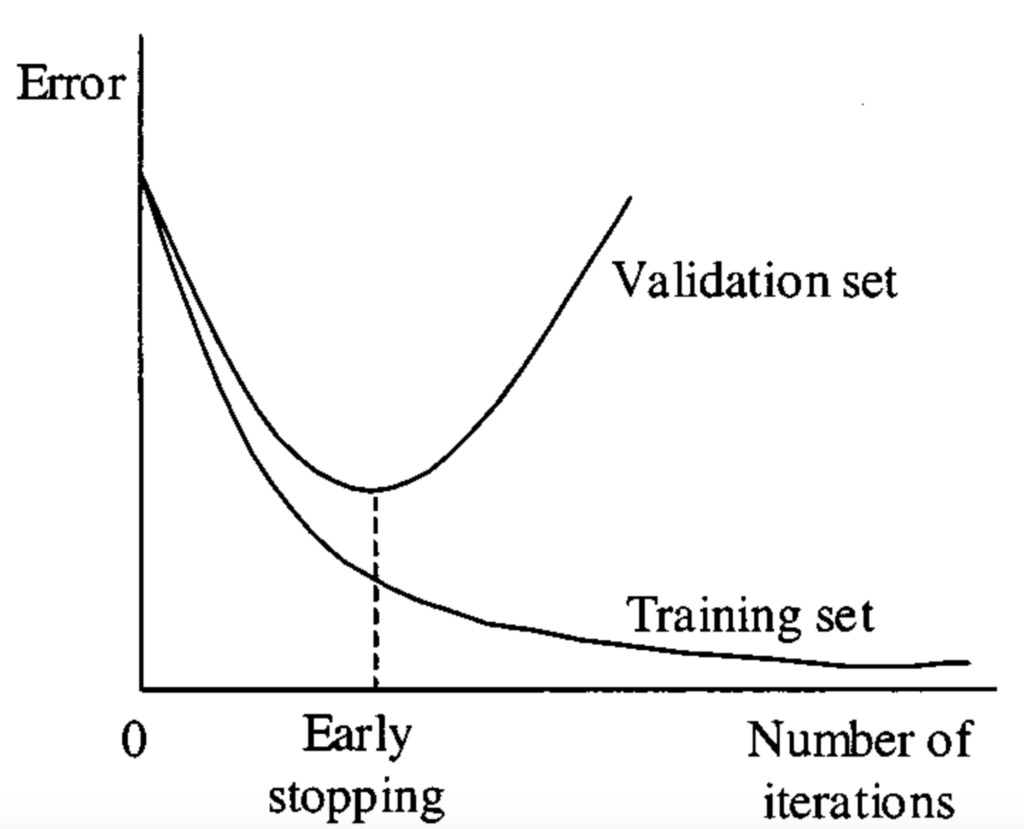

Training a model for more iterations increases its effective capacity. To use this fact to avoid overfitting, we take snapshots of the model during training, evaluate these snapshots on the validation set, and pick the snapshot that has the best validation performance. Once the validation loss has stopped improving for a while, it’s practical and efficient to stop training. This is called “Early Stopping”.
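The stopping rule can be sketched as a small function over per-epoch validation losses. This is a minimal sketch under stated assumptions: the `patience` parameter (how many epochs without improvement to tolerate) and the function name are introduced here for illustration, and the loss values are made up. In a real training loop, the snapshot would be saved at the point marked in the comment.

```python
def early_stopping(val_losses, patience):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once `patience` epochs have passed without a new best loss.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best: a real loop would save a model snapshot here.
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    # Ran out of epochs before the patience budget was used up.
    return best_epoch, len(val_losses) - 1


# Made-up validation curve: improves, then starts to rise.
losses = [1.0, 0.8, 0.6, 0.65, 0.7, 0.72, 0.5]
best, stopped = early_stopping(losses, patience=3)
print(best, stopped)  # → 2 5
```

Note that the last value in the example (0.5) is never seen because training stops at epoch 5. That is the trade-off of a finite patience: a larger value wastes more compute but is less likely to stop before a late improvement.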

How does early stopping work as a regularizer?

Early stopping has an effect similar to weight decay. We initialize our model with small weights, and every training iteration has the potential to move the weights towards larger values. Therefore, the earlier we stop training, the smaller the weights are likely to be.

iv. Dropout

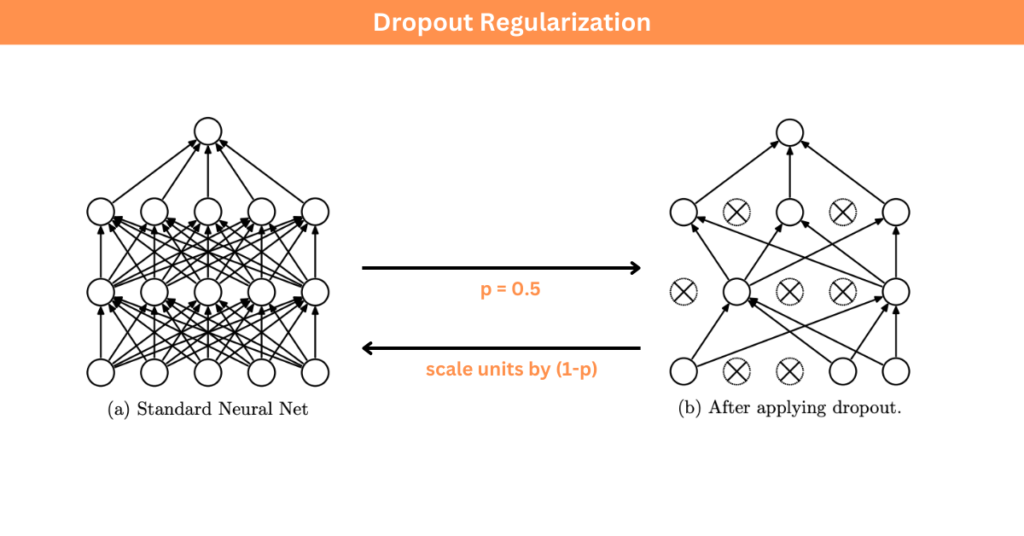

Dropout is a regularization technique for neural networks that randomly drops out neurons with some probability during training. As a result, the training algorithm uses a different random subset of the neurons at every iteration.

This approach encourages neurons to learn useful features on their own without having to rely on other neurons. Once the model is trained, the entire network is used for inference. The neuron outputs are scaled so that their overall magnitude stays the same even though the number of active neurons differs between training and testing.

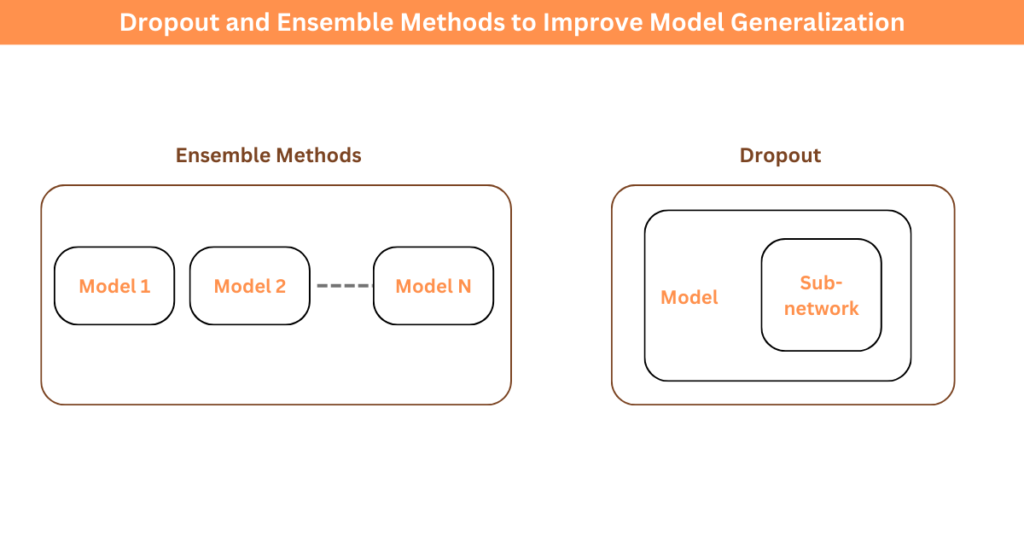
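A minimal sketch of dropout on a single layer's activations is shown below. It uses the "inverted dropout" variant, which applies the scaling during training so that inference needs no change; the text above only says that outputs are scaled, so this choice, along with the function name and the `drop_prob` parameter, is an assumption made for the example.

```python
import random


def dropout(activations, drop_prob, training, rng=random):
    """Apply inverted dropout to a list of activations.

    During training, each activation is zeroed with probability `drop_prob`
    and the survivors are scaled by 1 / (1 - drop_prob), so the expected
    output matches the input. At inference the activations pass through.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)  # seeded generator so runs are repeatable
layer_output = [1.0] * 8
print(dropout(layer_output, drop_prob=0.5, training=True, rng=rng))
print(dropout(layer_output, drop_prob=0.5, training=False))
```

Each training call draws a fresh mask, so a different set of neurons is active at every step, while the inference call returns the activations untouched.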

This approach is somewhat similar to ensemble methods in machine learning.

Ensemble methods train multiple models separately for the same task, then combine them to achieve better predictive performance.

The difference in Dropout is that the training algorithm doesn’t train disjoint models. Instead, a random sub-network is selected at every step. These sub-networks share parameters, as they all come from the same network but with a different set of units masked.

In a way, dropout can be considered as a type of ensemble method that trains nearly as many models as the number of steps, where the models share parameters.

3 – How to Choose the Right Regularization Technique?

Well, choosing the right regularization technique depends on several factors, including:

- The characteristics of the dataset,

- The complexity of the model, and

- Specific goals of the task

4 – What Information Does Regularization Provide?

Regularization methods introduce additional prior knowledge into the optimization process, such as:

- Smaller weights are better (as indicated by weight decay and early stopping)

- Parameter sharing is useful (a useful assumption when working with images or audio signals)

To prevent overfitting, we can:

- Limit the model capacity,

- Get more data

The techniques that we have covered so far focus on limiting the model capacity. We can also prevent overfitting by increasing the amount of data we have, for example by using data augmentation.
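As one simple illustration of data augmentation, the sketch below doubles a small image dataset by adding horizontally mirrored copies. The representation of images as nested lists and both function names are assumptions for this example, and flipping is only appropriate when it does not change the label (it would not be for digits or text, for instance).

```python
def horizontal_flip(image):
    """Mirror a 2D image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def augment_with_flips(images):
    """Return the original images followed by their mirrored copies."""
    return list(images) + [horizontal_flip(img) for img in images]


# A made-up 2x3 "image".
image = [[1, 2, 3],
         [4, 5, 6]]
print(horizontal_flip(image))  # → [[3, 2, 1], [6, 5, 4]]
print(len(augment_with_flips([image])))  # → 2
```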

Summary

- Regularization techniques control model complexity and prevent overfitting by adding penalties or constraints during training.

- Common techniques include L1 and L2 regularization, dropout, and early stopping.

- The choice of these techniques depends on factors such as the dataset characteristics, model complexity, and specific task.

- Regularization provides additional prior knowledge in optimization processes, such as preferring smaller weights and promoting parameter sharing.

Further Reading

Ensemble Learning – Wikipedia

Related Articles

- Early Stopping – But When? – Lutz Prechelt

- Dropout: A Simple Way to Prevent Neural Networks from Overfitting – Srivastava et al.