Overfitting, underfitting, and a model’s capacity are critical concepts in deep learning, particularly in the context of training neural networks.

In this post, we’ll learn how a model’s capacity can lead to overfitting or underfitting of the data, and how this affects the performance of a neural network.

Let’s begin!

Overview


In this post, you will learn:

- What is a model’s capacity?

- How does a model’s capacity affect the way it fits the same set of data?

- The concepts of overfitting, underfitting, and finding just the right fit

- How can we know whether a model will work well on unseen data?

Model’s Capacity

A model’s capacity refers to its ability to capture and represent complex patterns in the data. It reflects the flexibility and complexity of the model architecture.
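Parameter count is only a rough proxy for capacity (architecture, training time, and regularization also matter), but it is easy to compute. Here is a minimal sketch, assuming a plain fully connected network; `count_mlp_params` is a hypothetical helper written for illustration, not a library function:

```python
def count_mlp_params(layer_sizes: list[int]) -> int:
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input first,
    e.g. [1, 16, 1] is one input, one hidden layer of 16 units, one output.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # Weight matrix (n_in x n_out) plus one bias per output unit.
        total += n_in * n_out + n_out
    return total


for sizes in ([1, 4, 1], [1, 64, 1], [1, 64, 64, 1]):
    print(sizes, count_mlp_params(sizes))
```

Widening and deepening the network quickly multiplies the number of parameters: the three configurations above have 13, 193, and 4,353 parameters respectively.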

Let’s understand this with the help of an example:

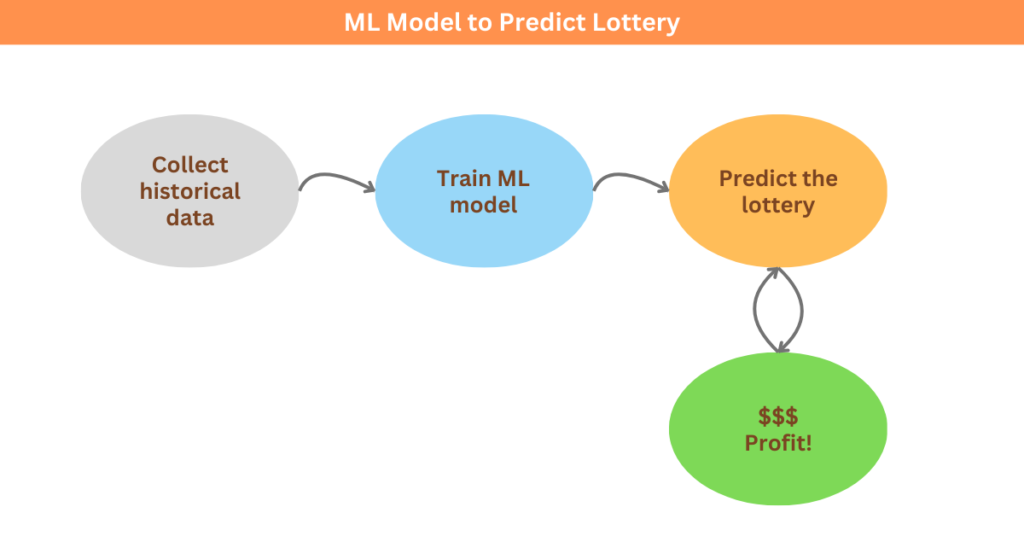

Suppose we train a model on historical lottery data and use it to make predictions about future draws, as shown in the figure.

The problem is that a model being able to fit the data it has seen doesn’t mean it will perform well on unseen data. A model with high capacity (one with a large number of parameters, trained for long enough) can simply memorize the training samples.

In the lottery case, the input could be the date of the lottery and the output the winning number drawn that day. If we train a high-capacity model on the historical data, it will effectively learn a lookup table that maps each input to its output, without any real predictive power!

The model would give the correct output for data it has seen before, but not for unseen data, such as a future date.
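The lookup-table behavior can be reproduced in a few lines. This is a toy sketch under stated assumptions: the "lottery" is simulated with Python's `random` module, each draw is independent of its date (so there is genuinely nothing to learn), and the fallback guess of 7 for unseen dates is an arbitrary, hypothetical choice:

```python
import random

random.seed(0)

# Hypothetical lottery history: each date maps to a number drawn uniformly
# from 1-49, independent of the date itself.
dates = [f"day-{i}" for i in range(1000)]
draws = {d: random.randint(1, 49) for d in dates}

train_dates, future_dates = dates[:800], dates[800:]

# A maximally "high-capacity" model: a table that memorizes every training pair.
lookup = {d: draws[d] for d in train_dates}


def predict(date: str) -> int:
    # Seen dates return the memorized answer; unseen dates get a fixed fallback guess.
    return lookup.get(date, 7)


def accuracy(ds: list[str]) -> float:
    return sum(predict(d) == draws[d] for d in ds) / len(ds)


train_acc = accuracy(train_dates)
test_acc = accuracy(future_dates)
print(f"train accuracy:  {train_acc:.2f}")  # 1.00: perfect recall of the past
print(f"future accuracy: {test_acc:.2f}")   # close to chance level (about 1/49)
```

The training accuracy is perfect by construction, while accuracy on future dates stays near chance, which is exactly the gap between seen and unseen data described above.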

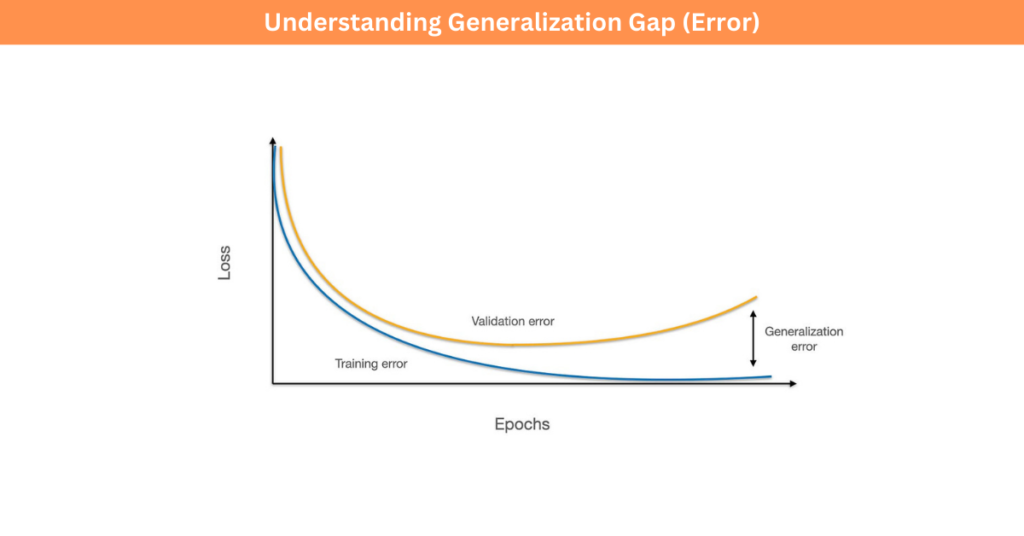

The difference between a model’s performance on seen and unseen data is called the generalization gap!

Generalization Gap

It’s common in machine learning to observe a gap between training and test performance, and the size of this gap is usually linked to the model’s capacity.

Increasing the model’s capacity helps reduce training error, but it also increases the risk of overfitting, leading to a larger generalization gap.
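To make the link between capacity and the generalization gap concrete, here is a small sketch that uses polynomial degree as a stand-in for capacity. The data (a noisy sine wave), the sample sizes, and the degree range are all assumptions chosen for illustration; `make_data` and `mse` are hypothetical helpers:

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)


def make_data(n):
    # Assumed toy problem: y = sin(2*pi*x) plus Gaussian noise (std 0.2).
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y


x_train, y_train = make_data(20)
x_test, y_test = make_data(500)


def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))


results = []
for degree in range(1, 10):  # polynomial degree plays the role of capacity
    model = Polynomial.fit(x_train, y_train, degree)
    train_err = mse(model, x_train, y_train)
    test_err = mse(model, x_test, y_test)
    results.append((degree, train_err, test_err, test_err - train_err))
    print(f"degree {degree}: train {train_err:.3f}  test {test_err:.3f}  gap {test_err - train_err:.3f}")
```

Training error can never go up as the degree grows, because each higher-degree family of polynomials contains the lower-degree ones; the test error, and therefore the gap, typically grows once the degree exceeds what 20 noisy points can support.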

How a Model’s Capacity Affects the Way It Fits the Same Set of Data

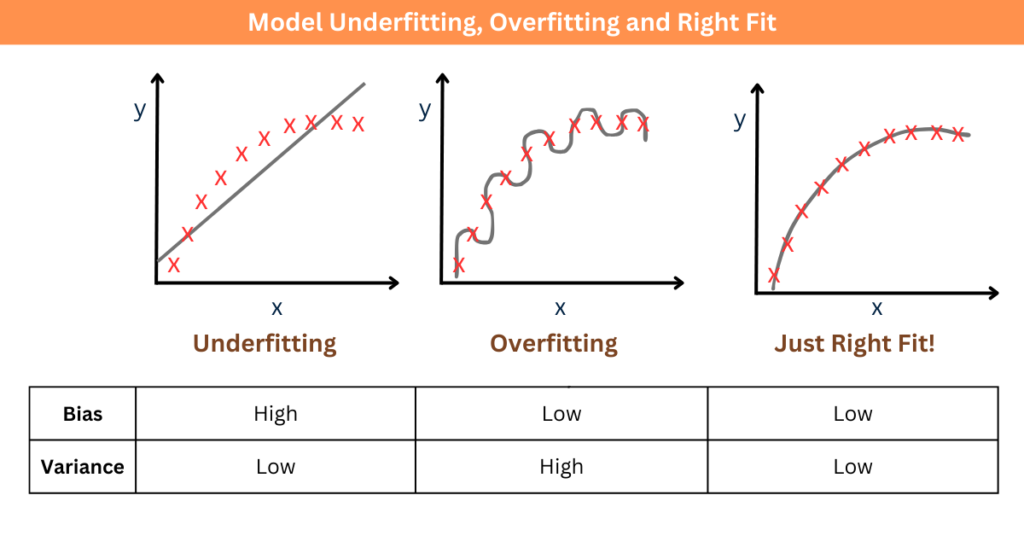

Let’s now look at how a model’s capacity affects the way it fits the same set of data. In the example shown in the figure, we fit models of increasing complexity to the same set of data points, starting with a linear function:

- Underfitting: With a linear function, the error on the training samples is quite high; the model has high bias but low variance.

- Overfitting: To get a closer fit, we fit a higher-order polynomial, which gives us low bias but high variance. In other words, the model works well on seen data but not so well on unseen data.

- Best Fit: If we fit a curve of moderate complexity through the data points, we get low bias and low variance: the model fits the data closely while seemingly generalizing well to unseen points.
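Bias and variance can also be estimated empirically: refit the same model class on many noisy versions of the training data, then measure how far the average prediction is from the truth (bias²) and how much individual predictions scatter around that average (variance). This is a sketch under assumed settings (a sine-wave ground truth, 20 fixed training inputs, noise std 0.2, 200 trials); the "underfit / good fit / overfit" labels are illustrative for this toy data only:

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(1)
x_design = np.linspace(0.0, 1.0, 20)  # fixed training inputs; only the noise changes
x_eval = np.linspace(0.0, 1.0, 50)    # points where we compare predictions


def true_f(x):
    # Assumed ground-truth function for this toy example.
    return np.sin(2 * np.pi * x)


def bias_variance(degree, n_trials=200, noise=0.2):
    preds = np.empty((n_trials, x_eval.size))
    for t in range(n_trials):
        # Draw fresh noisy targets each trial to see how much the fitted curve moves.
        y = true_f(x_design) + rng.normal(0.0, noise, x_design.size)
        preds[t] = Polynomial.fit(x_design, y, degree)(x_eval)
    mean_pred = preds.mean(axis=0)
    bias_sq = float(np.mean((mean_pred - true_f(x_eval)) ** 2))  # systematic error
    variance = float(np.mean(preds.var(axis=0)))                  # sensitivity to the sample
    return bias_sq, variance


for degree, label in [(1, "underfit"), (3, "good fit"), (9, "overfit")]:
    b, v = bias_variance(degree)
    print(f"{label:>8} (degree {degree}): bias^2 {b:.4f}  variance {v:.4f}")
```

The straight line misses the curve the same way every time (high bias, low variance), while the degree-9 polynomial tracks the truth on average but changes noticeably from one noisy sample to the next (low bias, high variance).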

Now you might wonder how we can tell how the model will perform on unseen data when, in fact, that data is unseen!

How to Know If the Model Will Work Well on Unseen Data

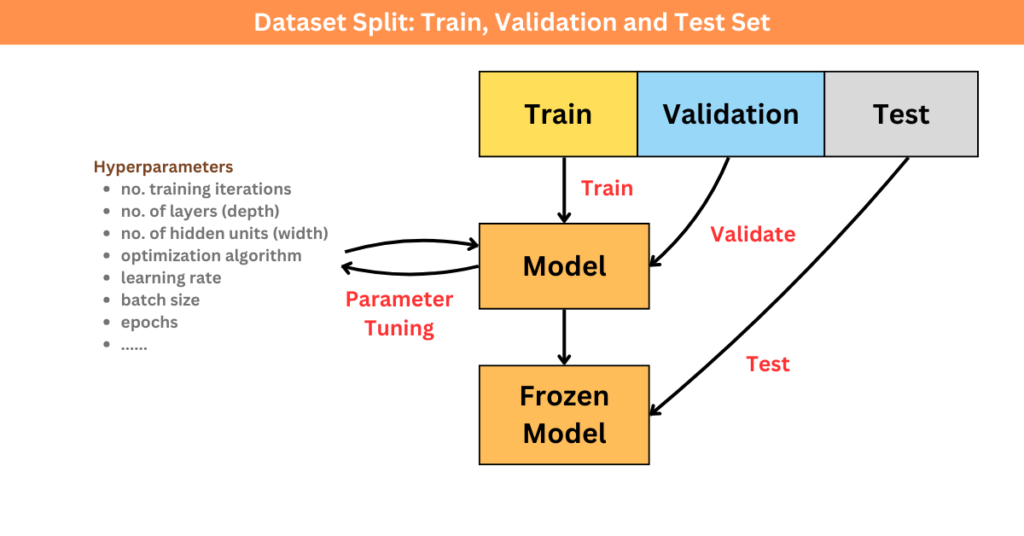

We can’t test a model on data points that are truly unseen, but we can hide a portion of our data and evaluate the model on that part.

Typically, we divide our data into three sets:

- Train Set: We use this to train the model.

- Validation Set: We use this to configure our model and estimate how well it generalizes to unseen data. This includes picking the hyperparameters (like the width and depth of the network, how neurons connect, and how long to train the model).

- Test Set: We use this set to evaluate the model’s performance on truly unseen data. Because it is not used for training or for choosing hyperparameters, it gives an unbiased estimate of the generalization performance.
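The workflow above can be sketched end to end: shuffle once, split into three disjoint parts, choose a hyperparameter using only the validation set, and touch the test set once at the very end. The 60/20/20 ratio, the toy data, and polynomial degree as the hyperparameter are assumptions for illustration; `split_indices` and `mse` are hypothetical helpers:

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(2)

# Assumed toy dataset: noisy sine wave, 100 samples.
x = rng.uniform(0.0, 1.0, 100)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 100)


def split_indices(n, train_frac=0.6, val_frac=0.2):
    """Shuffle indices once, then cut them into disjoint train/val/test parts."""
    idx = rng.permutation(n)
    n_train, n_val = int(train_frac * n), int(val_frac * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = split_indices(len(x))


def mse(model, idx):
    return float(np.mean((model(x[idx]) - y[idx]) ** 2))


# The hyperparameter (polynomial degree) is chosen using the validation set only.
candidates = range(1, 10)
models = {d: Polynomial.fit(x[train_idx], y[train_idx], d) for d in candidates}
best_degree = min(candidates, key=lambda d: mse(models[d], val_idx))

# The test set is used once, for the final estimate of generalization error.
test_error = mse(models[best_degree], test_idx)
print(f"chosen degree: {best_degree}, test MSE: {test_error:.3f}")
```

If the test set were also used to pick the degree, the reported test error would be optimistically biased, which is why the selection step looks only at `val_idx`.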

Here’s a post to learn more about ways to choose the train, validation, and test sets:

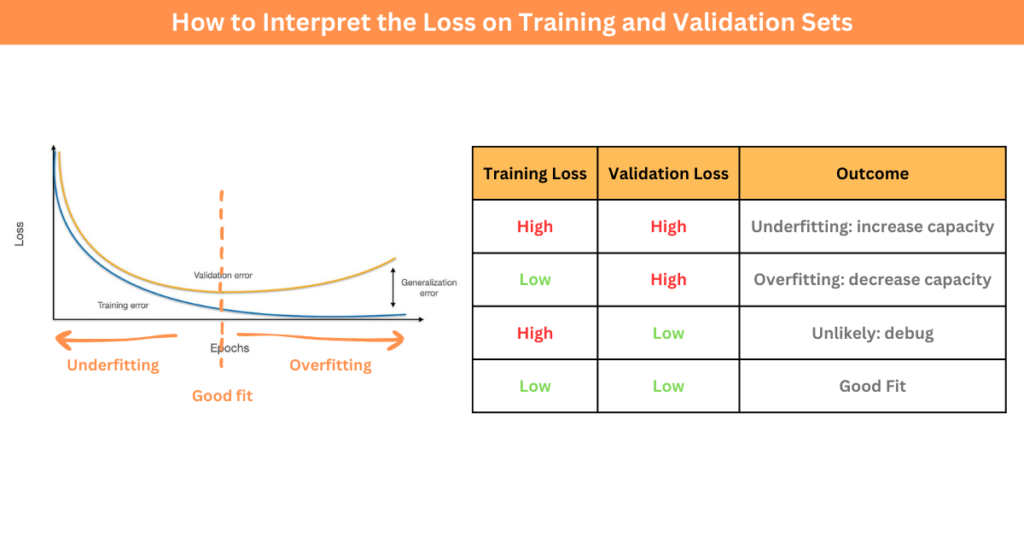

Before we wrap up, here’s a summary of how to interpret the error (or loss) on the training and validation sets in general.
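A common rule of thumb for reading these two numbers can be written as a small function. This is a rough heuristic rather than a definitive test: `diagnose` is a hypothetical helper, and `acceptable_error` and `max_gap` are problem-specific thresholds you would have to choose yourself:

```python
def diagnose(train_error: float, val_error: float,
             acceptable_error: float, max_gap: float) -> str:
    """Rough rule of thumb for reading training vs. validation error.

    acceptable_error and max_gap are assumed, problem-specific thresholds.
    """
    if train_error > acceptable_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting: high training error (high bias)"
    if val_error - train_error > max_gap:
        # Fits the training data well, but the generalization gap is large.
        return "overfitting: low training error but a large gap (high variance)"
    return "good fit: low training error and a small gap"


print(diagnose(0.30, 0.32, acceptable_error=0.05, max_gap=0.03))  # underfitting
print(diagnose(0.01, 0.25, acceptable_error=0.05, max_gap=0.03))  # overfitting
print(diagnose(0.02, 0.04, acceptable_error=0.05, max_gap=0.03))  # good fit
```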

To deal with overfitting, we can try shrinking the size of our model or using regularization techniques.
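As one example of a regularization technique, here is a sketch of L2 (ridge) regularization applied to a high-capacity degree-9 polynomial, using the closed-form solution. The toy data, the λ values, and the `ridge_fit` helper are assumptions for illustration; for simplicity the intercept is penalized too, which practical implementations often avoid:

```python
import numpy as np

rng = np.random.default_rng(3)

# Assumed toy data on [-1, 1], so raw powers of x stay reasonably scaled.
x = rng.uniform(-1.0, 1.0, 30)
y = np.sin(np.pi * x) + rng.normal(0.0, 0.2, 30)
X = np.vander(x, N=10, increasing=True)  # columns 1, x, ..., x^9: a high-capacity model


def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: w = (X^T X + lam * I)^-1 X^T y.

    For simplicity this also penalizes the intercept column.
    """
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


norms = []
for lam in [1e-6, 1e-3, 1e-1, 1.0]:
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    print(f"lambda={lam:g}: ||w|| = {norms[-1]:.2f}")
```

A larger λ pulls the weights toward zero, which limits how wiggly the degree-9 polynomial can become, effectively reducing its capacity. In practice λ is itself a hyperparameter that you would tune on the validation set.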

Summary

- A model’s capacity refers to its ability to capture complex patterns in data. High-capacity models can memorize training samples but may struggle to generalize to unseen data, which leads to overfitting.

- The difference between a model’s performance on training data and unseen data is known as the generalization gap.

- Achieving the right balance between bias and variance is essential for optimal model performance.

- To assess the model’s performance on unseen data, datasets are typically divided into train, validation, and test sets.