Fix the Vanishing gradient problem – In deep neural networks, we encounter a common hurdle known as the vanishing gradient problem.

Imagine this: You’re training a deep neural network, hoping it will learn from your data and make smart predictions. But as the network goes deeper, something strange happens. The gradients, which guide the network’s learning process, shrink as they are propagated backward through the layers until they become vanishingly small. The earliest layers barely update, as if the network were stuck and couldn’t move forward.

This is where the Rectified Linear Unit (ReLU) function comes to the rescue.

In this post, we’ll explore how we can use ReLU to make neural networks learn more efficiently.

Overview

In this post, you will learn:

- How you can use ReLU to fix the vanishing gradient problem

- What dying ReLU neurons are and how they impact the neural network’s performance

- How you can fix dying ReLU neurons

1 – How to Use ReLU to Fix the Vanishing Gradient Problem?

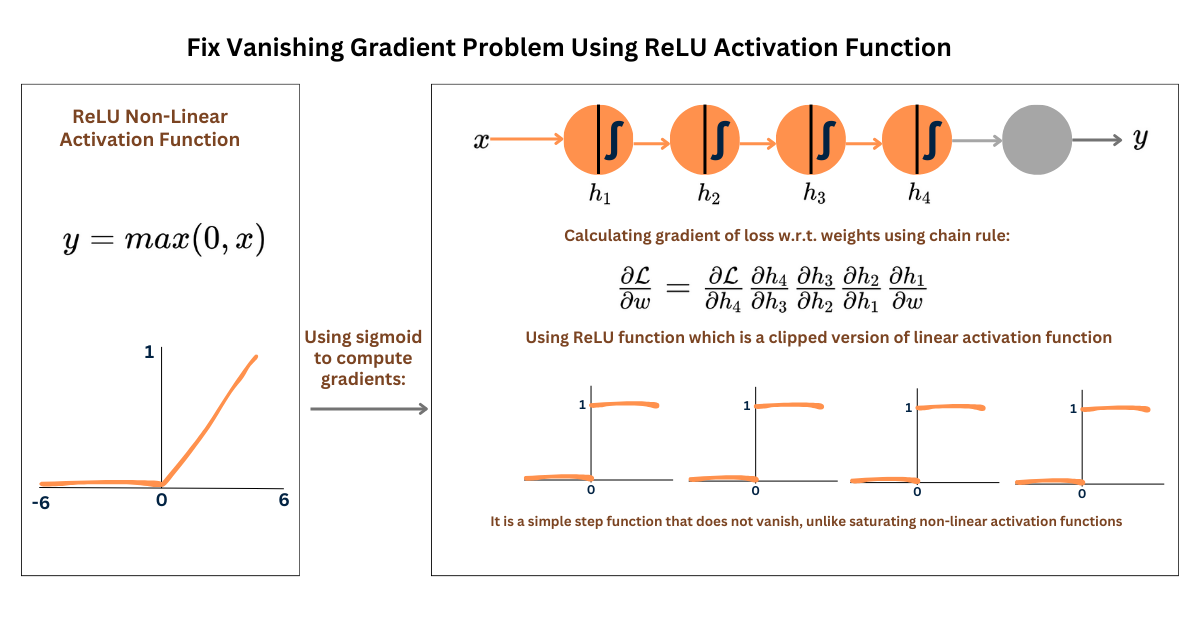

The ReLU function returns its input when the input is positive and zero otherwise, that is, ReLU(x) = max(0, x).

ReLU is a simple piecewise-linear function whose gradient does not vanish for positive inputs, unlike saturating non-linear activation functions such as sigmoid and tanh.
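As a minimal sketch, ReLU and its derivative can be written in a few lines of plain Python. The function names `relu` and `relu_grad` are chosen here for illustration, and the derivative at exactly zero is set to 0 by convention, which is an assumption rather than something the math dictates.

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0 otherwise."""
    return x if x > 0 else 0.0


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 for negative inputs.

    The derivative is undefined at exactly 0; returning 0 there is a
    common convention and is the assumption made in this sketch.
    """
    return 1.0 if x > 0 else 0.0


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.1f}  grad={relu_grad(x):.1f}")
```

Note that the derivative, not ReLU itself, is the step-shaped function: it jumps from 0 to 1 at the origin.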

The figure below summarizes how ReLU can overcome the vanishing gradient problem:

Here’s how ReLU helps in fixing or at least alleviating the vanishing gradient problem:

- It does not suffer from saturation for positive input values, unlike activation functions like sigmoid and hyperbolic tangent functions. This means that ReLU does not approach a constant value for large positive inputs, allowing gradients to flow more freely.

- It introduces sparsity in neural network activations by zeroing out negative input values, which makes the activations cheap to compute and can help training converge faster.

- Its gradient is exactly 1 for positive input values, so backpropagating through active ReLU units does not shrink the gradient. This helps prevent gradients from becoming excessively small in earlier layers.
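To get a feel for the points above, here is a rough sketch that multiplies the activation derivative across 20 layers. It is a deliberate simplification: it ignores the weight matrices that also appear in the real backpropagated gradient, and it assumes every layer sees the same pre-activation value. The helper `gradient_factor` is a hypothetical name used only for this illustration.

```python
import math


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid; its maximum value is 0.25 at x = 0."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def gradient_factor(grad_fn, pre_activation: float, depth: int) -> float:
    """Product of `depth` identical activation derivatives (weights ignored)."""
    return grad_fn(pre_activation) ** depth


depth = 20
# Best case for sigmoid: every layer sits at x = 0, where the derivative peaks.
sigmoid_factor = gradient_factor(sigmoid_grad, 0.0, depth)  # 0.25 ** 20, roughly 9.1e-13
# Active ReLU units: the derivative is 1 at every layer.
relu_factor = gradient_factor(relu_grad, 1.0, depth)  # 1.0 ** 20

print(f"sigmoid: {sigmoid_factor:.2e}")
print(f"relu:    {relu_factor:.2e}")
```

Even in its best case, the sigmoid factor collapses toward zero after 20 layers, while the factor for active ReLU units stays at 1.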

2 – Dying ReLU Neurons

Neurons with ReLU activation are easy to optimize. However, they can sometimes die. The term ‘dying ReLU neurons’ refers to the phenomenon where certain neurons in a network become inactive, meaning they consistently output zero for all inputs.

Here’s why this can happen:

- If a neuron consistently receives negative weighted inputs during training, its output is always zero, making it inactive.

- During backpropagation, the weights of the neural network are updated based on gradients computed with respect to the loss function. When a neuron’s weighted input is negative, the ReLU gradient is zero, so the gradient of the loss with respect to that neuron’s weights is also zero and the weights stop updating.

- Over time, if a neuron remains inactive for a large portion of the training data, it may fail to contribute meaningfully to the learning process. The neuron can become “stuck”, making it useless for the task at hand.

- While sparsity introduced by ReLU can be beneficial for reducing computational complexity and improving efficiency, excessive neuron deaths can lead to overly sparse networks. This affects the neural network’s ability to learn complex patterns in the data.
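The mechanism described above can be sketched with a single ReLU neuron. The weights, the large negative bias, and the three input samples below are hypothetical values picked so that the weighted input is negative for every sample; `neuron_gradients` is an illustrative helper, not a library function.

```python
def relu_grad(z: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def neuron_gradients(weights, bias, inputs, upstream=1.0):
    """Gradients of the loss w.r.t. the weights and bias of one ReLU neuron.

    `upstream` stands in for the gradient of the loss w.r.t. the neuron's output.
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # weighted input
    local = upstream * relu_grad(z)  # zero whenever z <= 0
    return [local * x for x in inputs], local


# Hypothetical neuron pushed far into the negative region by its bias.
weights, bias = [0.5, -0.3], -10.0
batch = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.2]]

grads = [neuron_gradients(weights, bias, x) for x in batch]
all_zero = all(gb == 0.0 and all(g == 0.0 for g in gw) for gw, gb in grads)
print("all gradients are zero:", all_zero)
```

Because every gradient is zero, a gradient descent step leaves the weights and bias unchanged, so the neuron has no way to recover on these inputs.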

3 – How to Fix Dying ReLU Neurons?

Here are some techniques that you can use to resolve the issue of dying neurons:

i. Leaky ReLU

This function allows a small, non-zero gradient for negative inputs. This prevents neurons from becoming inactive and promotes better gradient flow during training.
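A minimal sketch of Leaky ReLU and its derivative follows. The slope of 0.01 for negative inputs is a commonly used value and is assumed here; the parameter name `negative_slope` is chosen for this example.

```python
def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Return x for positive inputs and a scaled-down x for negative inputs."""
    return x if x > 0 else negative_slope * x


def leaky_relu_grad(x: float, negative_slope: float = 0.01) -> float:
    """Derivative: 1 for positive inputs, `negative_slope` otherwise (never 0)."""
    return 1.0 if x > 0 else negative_slope


for x in (-5.0, -1.0, 2.0):
    print(f"x={x:+.1f}  out={leaky_relu(x):+.3f}  grad={leaky_relu_grad(x):.2f}")
```

Since the derivative is never exactly zero, a neuron that lands in the negative region still receives a small gradient and can move back out.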

ii. Parametric ReLU

This function introduces a learnable parameter that controls the slope of the negative part of the activation function. It provides more flexibility and adaptability by allowing the slope to be learned during training. This reduces the likelihood of neurons dying.
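Here is a small sketch of how the slope can be learned. It performs one hand-written gradient descent step on the slope `alpha` for a single negative input. The starting slope of 0.25, the learning rate, and the upstream gradient of 1.0 are all assumed values for illustration.

```python
def prelu(x: float, alpha: float) -> float:
    """Parametric ReLU: x for positive inputs, alpha * x otherwise."""
    return x if x > 0 else alpha * x


def prelu_grad_alpha(x: float) -> float:
    """Derivative of the output w.r.t. alpha: x for non-positive inputs, else 0."""
    return 0.0 if x > 0 else x


alpha = 0.25          # assumed initial slope
learning_rate = 0.1   # assumed learning rate
x, upstream = -2.0, 1.0  # hypothetical input and loss gradient w.r.t. the output

# One gradient descent step on the slope parameter.
alpha_new = alpha - learning_rate * upstream * prelu_grad_alpha(x)
print(f"alpha before: {alpha:.2f}, after: {alpha_new:.2f}")
```

The slope only receives a gradient from negative inputs, which is exactly the region where a plain ReLU would pass nothing back.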

iii. Randomized ReLU (RReLU)

This function sets the negative part of the activation function to a small, random slope during training. This stochastic element helps prevent neurons from becoming completely inactive and encourages the exploration of different activation patterns, mitigating the risk of dying neurons.
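The sketch below shows one way this can look in code. It assumes the slope is drawn uniformly between a lower and an upper bound during training and fixed to their average at inference time; the bounds 1/8 and 1/3 are assumed values for this example.

```python
import random

LOWER, UPPER = 1 / 8, 1 / 3  # assumed bounds for the random slope

_default_rng = random.Random()


def rrelu(x: float, training: bool, rng: random.Random = _default_rng) -> float:
    """Randomized ReLU for a single value.

    Positive inputs pass through unchanged. Negative inputs are scaled by a
    slope sampled uniformly from [LOWER, UPPER] in training mode, and by the
    midpoint of that range otherwise.
    """
    if x > 0:
        return x
    slope = rng.uniform(LOWER, UPPER) if training else (LOWER + UPPER) / 2
    return slope * x


rng = random.Random(0)  # seeded only to make the demo repeatable
print([round(rrelu(-1.0, training=True, rng=rng), 3) for _ in range(3)])
print(round(rrelu(-1.0, training=False), 3))
```

In training mode each call can return a different value for the same negative input, while inference is deterministic.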

iv. Initialization Techniques

Proper initialization of weights can also help to prevent or mitigate the issue of dying neurons. Techniques such as “He initialization”, which scales the initial weights according to the number of inputs to each layer, help keep activations and gradients at a reasonable scale during the initial stages of training, reducing the likelihood of neurons becoming inactive.
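As a rough sketch, the normal variant of He initialization draws each weight from a zero-mean Gaussian with standard deviation sqrt(2 / fan_in), where fan_in is the number of inputs to the layer. The helper name `he_normal` and the layer sizes below are assumptions made for this example.

```python
import math
import random


def he_normal(fan_in: int, fan_out: int, rng: random.Random) -> list[list[float]]:
    """Return a fan_out x fan_in weight matrix drawn from N(0, 2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(0)  # seeded only to make the demo repeatable
weights = he_normal(fan_in=256, fan_out=128, rng=rng)

# Check that the empirical variance is close to the target 2 / fan_in.
flat = [w for row in weights for w in row]
mean = sum(flat) / len(flat)
var = sum((w - mean) ** 2 for w in flat) / len(flat)
print(f"target variance: {2 / 256:.5f}, empirical variance: {var:.5f}")
```

The factor of 2 compensates for ReLU zeroing out roughly half of the pre-activations, which keeps the signal from shrinking layer after layer at the start of training.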

Summary

- ReLU is a non-linear activation function that alleviates the vanishing gradient problem by avoiding saturation for positive inputs, introducing sparsity, and keeping a constant gradient of 1 for positive inputs.

- Despite these advantages, ReLU neurons may die, meaning that they output zero consistently during training and stop learning.

- Techniques like Leaky ReLU, parametric ReLU, randomized ReLU, and proper weight initialization can help address the issue of dying neurons, ensuring better gradient flow and more effective learning.
