Choosing the train, validation, and test sets is a critical step in developing a machine learning model. This split helps you to measure your model’s performance by making sure that the datasets used for model training and evaluation are distinct.

In this post, we will look at what dataset splitting is, why it matters, how to partition a dataset, and how to make sure the split is done properly.

Let’s begin!

Overview

1 – What are Train, Validation, and Test Sets?

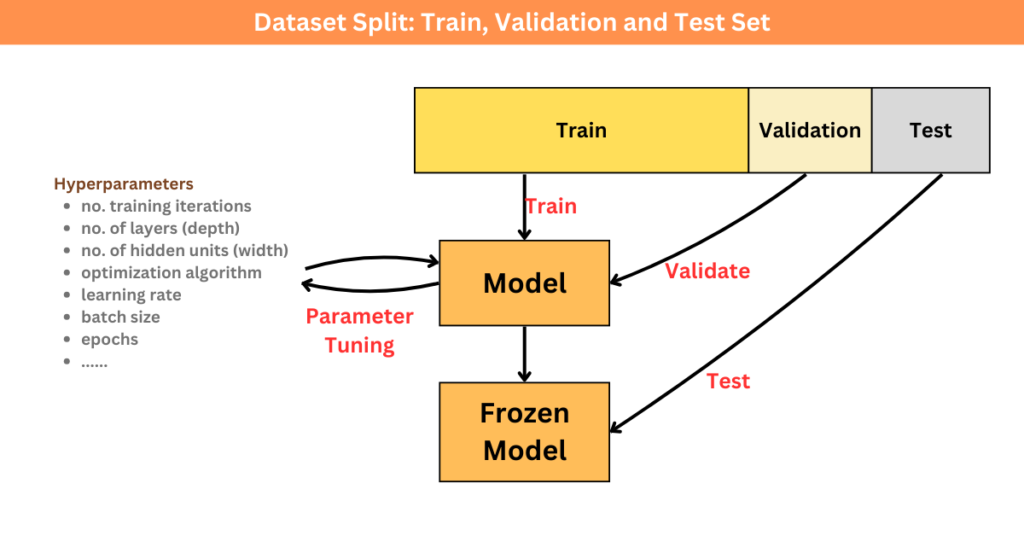

In the context of machine learning and data science, the terms “train”, “validation”, and “test” sets refer to subsets of the dataset used for different stages of model development and evaluation:

- Train Dataset: This is the subset of the dataset used to train the machine learning model.

- Validation Dataset: This set is used to tune the model’s hyperparameters and assess the model’s performance during training.

- Test Dataset: This is a completely independent subset of the dataset that is used to assess the final performance and generalization ability of the model.

2 – Why Split the Dataset into Train, Validation, and Test Sets?

As defined earlier, we train our model on the training set, evaluate it on the validation set, and test it using the test set as shown in the figure below:

Splitting the dataset into multiple subsets serves several purposes, including:

i. Model Training

The largest portion of the split is used to train the machine learning model. Using this portion of the data, the model learns to recognize patterns and relationships within the data. This is what allows the model to make predictions on new, unseen data.

ii. Model Evaluation:

By reserving a portion of the data for evaluation, you can assess how well the model generalizes to unseen data. This evaluation helps you determine whether the model has learned meaningful patterns or is simply memorizing the training data (overfitting).
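To make the idea of memorization concrete, here is a minimal sketch using only the Python standard library. The toy task, the noise level, and the `memorizer` function are all made up for illustration; the point is only that a model can look perfect on the data it has seen and still do poorly on data it has not.

```python
import random

random.seed(0)

# Hypothetical toy task: the label is 1 when x > 0.5, with 20% of labels flipped as noise.
def make_sample():
    x = random.random()
    label = int(x > 0.5)
    if random.random() < 0.2:
        label = 1 - label
    return x, label

data = [make_sample() for _ in range(400)]
train, held_out = data[:300], data[300:]

# A "model" that only memorizes: it stores every training pair and
# falls back to a constant guess for inputs it has never seen.
memory = {x: label for x, label in train}

def memorizer(x):
    return memory.get(x, 0)

def accuracy(model, samples):
    return sum(model(x) == label for x, label in samples) / len(samples)

train_acc = accuracy(memorizer, train)
held_out_acc = accuracy(memorizer, held_out)
print(f"train accuracy:    {train_acc:.2f}")
print(f"held-out accuracy: {held_out_acc:.2f}")
```

If you only looked at the training accuracy, this model would seem flawless. The gap between the two numbers is the signal that it has memorized rather than learned, and you can only see that gap because some data was held back.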

iii. Hyperparameter Tuning:

Training a machine learning model is an optimization problem where the task is to optimize the parameters (i.e. weights and biases) so that the model can learn useful features from data. Hyperparameters such as learning rate, regularization strength, or network architecture are not learned by this optimization; you have to choose them yourself, usually by trying several settings. The validation set provides an independent dataset for comparing the model’s performance across different hyperparameter settings.
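As a rough sketch of this workflow, the snippet below fits a one-weight linear model with an L2 penalty and picks the penalty strength by comparing validation errors. The toy data, the candidate values, and the names `fit`, `mse`, and `lam` are assumptions chosen for illustration, not a recommended recipe.

```python
import random

random.seed(0)

# Hypothetical toy data: y = 2x plus Gaussian noise.
def make_sample():
    x = random.uniform(-1, 1)
    return x, 2 * x + random.gauss(0, 0.5)

train = [make_sample() for _ in range(20)]
val = [make_sample() for _ in range(200)]

def fit(samples, lam):
    """Closed-form weight for y ≈ w * x with an L2 penalty of strength lam."""
    sxy = sum(x * y for x, y in samples)
    sxx = sum(x * x for x, _ in samples)
    return sxy / (sxx + lam)

def mse(w, samples):
    """Mean squared error of the prediction w * x on the given samples."""
    return sum((w * x - y) ** 2 for x, y in samples) / len(samples)

# The weight w is a parameter (learned from the training set);
# lam is a hyperparameter (chosen by comparing validation errors).
candidates = [0.0, 0.1, 1.0, 10.0]
val_errors = {lam: mse(fit(train, lam), val) for lam in candidates}
best_lam = min(val_errors, key=val_errors.get)
print(val_errors, "-> best lam:", best_lam)
```

Notice that the validation samples never enter `fit`. They are only used to compare the candidates, which is exactly the role the validation set plays.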

iv. Preventing Data Leakage:

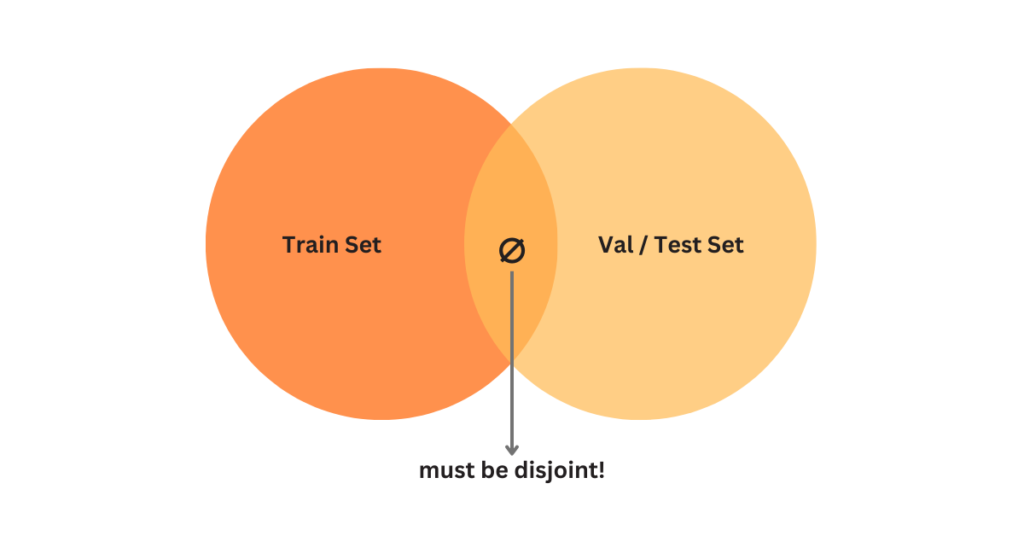

Splitting the dataset into training, validation, and testing sets helps prevent data leakage. Here’s how:

- Training set: The model learns from the training set exclusively. Its parameters are adjusted iteratively using an optimization algorithm to minimize errors between predictions and actual labels in the training data. The model’s performance on this data is expected to improve over time.

- Validation set: The model does not directly learn from the validation set. Instead, its performance on this independent dataset is evaluated to make decisions about hyperparameters or model architecture adjustments.

- Testing set: This subset provides an unbiased evaluation of the final model’s performance. This dataset is never used in training and serves as a completely independent dataset to assess how well the trained model generalizes on unseen data.

What can cause data leakage?

If the test set is used during training or hyperparameter tuning, it introduces data leakage. The model might then inadvertently learn from this data, leading to an overestimation of its performance on unseen data.

3 – How Do We Partition the Dataset into Train, Validation, and Test Sets?

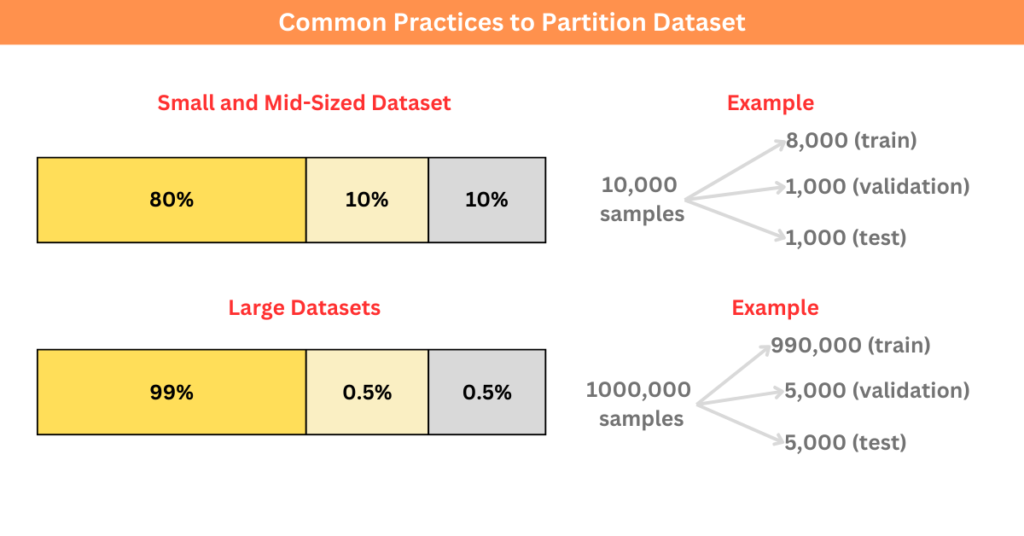

Well, this depends on the amount of data you have. Here are some common practices to obtain train, validation, and test sets based on the size and characteristics of your dataset:

i. Small and Mid-Sized Datasets

For small and mid-sized datasets, it is common practice to use roughly 80% of the data for training and the rest for validation and testing.

For example, if we have a dataset with 10,000 samples, we can reserve 1,000 samples for testing, 1,000 for validation, and the remaining 8,000 for training the network.

ii. Large Datasets

When working with large datasets, using 20% of the data for validation and testing can be wasteful, because a much smaller fraction already gives enough samples for a reliable evaluation. We can keep 99% of the data for training and use the rest as 0.5% for validation and 0.5% for testing.

For example, if our dataset has 1 million samples, we can keep 990,000 samples for training, 5,000 for validation, and 5,000 for testing.
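Both examples can be reproduced with a small helper. This is a minimal sketch using only the standard library; `split_dataset` and its parameters are hypothetical names, and the samples are plain integers standing in for real data.

```python
import random

def split_dataset(samples, val_frac, test_frac, seed=0):
    """Shuffle a copy of `samples` and cut it into train, validation, and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * val_frac)
    n_test = round(len(shuffled) * test_frac)
    n_train = len(shuffled) - n_val - n_test
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Small or mid-sized dataset: 80% / 10% / 10% of 10,000 samples.
train, val, test = split_dataset(range(10_000), val_frac=0.10, test_frac=0.10)
print(len(train), len(val), len(test))  # 8000 1000 1000

# Large dataset: 99% / 0.5% / 0.5% of 1,000,000 samples.
big_train, big_val, big_test = split_dataset(
    range(1_000_000), val_frac=0.005, test_frac=0.005
)
print(len(big_train), len(big_val), len(big_test))  # 990000 5000 5000
```

Fixing the `seed` makes the split reproducible, so the same samples land in the same subset every time you rerun your experiments.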

Some Datasets Might Have Duplicate Samples!

Duplicates can introduce biases into training, validation, and test sets. Remove duplicate samples before splitting the dataset, or check after splitting that no sample is present in more than one subset.

For example, if a sample is present in the training set, make sure that it is not present in the validation or test sets.
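Here is a minimal sketch of both checks, assuming the samples are hashable (tuples here) and that only exact duplicates matter. The helper names and the tiny dataset are hypothetical; near-duplicates, such as slightly cropped copies of an image, would need a different comparison.

```python
def deduplicate(samples):
    """Drop exact duplicates while keeping the first occurrence of each sample."""
    seen = set()
    unique = []
    for sample in samples:
        if sample not in seen:
            seen.add(sample)
            unique.append(sample)
    return unique

def shared_samples(a, b):
    """Return the samples that appear in both subsets."""
    return set(a) & set(b)

# Hypothetical toy dataset of (text, label) pairs with one repeated row.
raw = [("good movie", 1), ("bad plot", 0), ("good movie", 1), ("fine acting", 1)]
clean = deduplicate(raw)
print(len(raw), "->", len(clean))  # 4 -> 3

# Splitting the raw list by position would leak the repeated row into the test part.
print(shared_samples(raw[:2], raw[2:]))  # {('good movie', 1)}

# After deduplication, the same kind of positional split has no overlap.
train, val, test = clean[:1], clean[1:2], clean[2:]
print(shared_samples(train, val), shared_samples(train, test), shared_samples(val, test))
```

Running the overlap check after every split is cheap, and it catches leakage even when the duplicates were introduced somewhere upstream of your own code.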

4 – How to Ensure That the Dataset Is Split Properly?

i. Size of Each Subset:

- The training set should typically be the largest portion of the dataset, as the model learns from this data.

- The validation set is usually smaller than the training set.

- The test set is often the smallest subset.

ii. Randomness of split:

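Randomness helps prevent bias in the subsets. To achieve this, you should randomly shuffle the dataset before splitting it. For example, if the samples are stored sorted by class, an unshuffled split could leave the test set with examples from only one class. Shuffling makes it likely that each subset represents a diverse range of examples.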

iii. Stratified Splitting:

In classification tasks, especially when dealing with imbalanced distributions, you should perform stratified splitting. Stratified splitting ensures that each class is represented proportionally in each subset, preventing one class from being overrepresented or underrepresented in any of the sets.
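One simple way to get this behaviour is to shuffle and split each class separately, then merge the pieces. The sketch below does that with the standard library only; `stratified_split` is a hypothetical helper written for this post, and the 90/10 label list is made up. If you use scikit-learn, its `train_test_split` function offers a `stratify` argument for the same purpose.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, test_frac, seed=0):
    """Return (train_idx, test_idx) so each class keeps roughly its share in both parts."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # shuffle within the class before cutting
        n_test = round(len(indices) * test_frac)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx

# Hypothetical imbalanced labels: 90 negatives and 10 positives.
labels = [0] * 90 + [1] * 10
train_idx, test_idx = stratified_split(labels, test_frac=0.2)
print(Counter(labels[i] for i in train_idx))
print(Counter(labels[i] for i in test_idx))
```

With these labels, both parts keep the original 9:1 ratio between the classes. A plain random split of the same data could easily put only one or two positives, or none at all, into the test part.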

Summary

- Splitting the dataset into distinct subsets facilitates unbiased evaluation of model performance and helps detect overfitting.

- The partitioning of the dataset into train, validation, and test sets depends on factors such as dataset size, expected model complexity, and data distribution.

- Attention should be paid to potential data leakage, such as duplicate samples present in multiple subsets, to ensure the integrity of model evaluation.

- Proper dataset splitting techniques involve considering the size of each subset, ensuring randomness in the split, and employing stratified splitting, especially in classification tasks with imbalanced class distributions.