Vanishing Gradients – In this post, we are tackling a common problem that can pop up when training artificial neural networks: the vanishing gradient problem, one form of the broader unstable gradient problem.

But before we get into that, let’s clarify what we mean by gradients:

When we talk about gradients in an ANN, we mean the gradients of the loss function with respect to the weights in the network.

So, how do we calculate these gradients?

By using backpropagation!

During training, we use these computed gradients to update the weights. This is how the network learns.

The weights are updated using optimization algorithms such as stochastic gradient descent (SGD). The goal is to find the value of each connection's weight that minimizes the total loss of the network.
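To make the update rule concrete, here is a minimal sketch in Python. It assumes a hypothetical one-weight model with the toy loss L(w) = (w - 3)^2, whose minimum is at w = 3. Real SGD estimates the gradient from a random mini-batch of training data; since this toy loss has no data, the sketch uses the exact gradient, which makes it plain gradient descent.

```python
def loss(w: float) -> float:
    """Toy loss for a hypothetical one-weight model; its minimum is at w = 3."""
    return (w - 3.0) ** 2


def grad(w: float) -> float:
    """Exact derivative of the toy loss: dL/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w: float, learning_rate: float, steps: int) -> float:
    """Repeatedly move the weight a small step against the gradient."""
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


w_final = gradient_descent(w=0.0, learning_rate=0.1, steps=100)
print(f"w = {w_final:.4f}, loss = {loss(w_final):.2e}")
```

With this learning rate, each step shrinks the distance to the minimum by the constant factor 1 - 2 × 0.1 = 0.8, so after 100 steps the weight is essentially at 3.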

With this understanding, let’s talk about vanishing gradients.

Overview

In this post, you will learn:

- What is the Vanishing Gradient Problem?

- Which neural network setups are prone to the vanishing gradient problem?

- How does the vanishing gradient problem occur?

- Understanding vanishing gradients through an example.

- Signs that your neural network suffers from the vanishing gradient problem.

1 – What is the Vanishing Gradient Problem?

The vanishing gradient problem makes neural networks difficult to train, and it especially affects the weights in the earlier layers.

During training, backpropagation computes the gradient of the loss with respect to each weight in the network, and stochastic gradient descent (SGD) uses these gradients to update the weights. However, in some cases, the gradient becomes exceedingly small, hence the term “vanishing gradient.”

Why is a small gradient a big deal?

Well, when SGD updates a weight based on its gradient, the update is proportional to that gradient. If the gradient is vanishingly small, then the update will be vanishingly small too.

This means that the network is making only tiny adjustments to the weights. Due to this, the network learns very slowly.

Also, if the adjustments are too small, the network can get stuck in a rut and stop improving altogether.
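The sketch below illustrates why a vanishing gradient leads to vanishing updates. It applies the plain SGD update `w = w - learning_rate * gradient` to a single weight with two hypothetical gradient values. For simplicity it holds each gradient fixed across steps, which is an assumption: in real training the gradient changes at every step.

```python
LEARNING_RATE = 0.1


def sgd_update(weight: float, gradient: float, lr: float = LEARNING_RATE) -> float:
    """One plain SGD step: the change in the weight is lr * gradient."""
    return weight - lr * gradient


def distance_moved(gradient: float, steps: int, start: float = 0.5) -> float:
    """How far a weight travels after `steps` updates with a fixed gradient."""
    w = start
    for _ in range(steps):
        w = sgd_update(w, gradient)
    return abs(w - start)


healthy = distance_moved(gradient=0.2, steps=100)
vanishing = distance_moved(gradient=2e-6, steps=100)
print(f"healthy gradient:   weight moved {healthy:.6f}")
print(f"vanishing gradient: weight moved {vanishing:.8f}")
```

After the same 100 steps, the weight with the tiny gradient has moved about 100,000 times less, which is exactly the "tiny adjustments" behavior described above.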

2 – Neural Network Configuration Susceptible to Vanishing Gradients

As we discussed earlier, neural networks are trained by gradient descent. Each weight adjustment is guided by the gradient of the loss function, which tells us in which direction, and how strongly, each parameter should change to reduce the error.

Gradient descent requires the functions used in the network to be differentiable, meaning we can compute their slope (gradient).

In neural networks, activation functions introduce non-linearity. Sigmoid and the hyperbolic tangent function (Tanh) are both differentiable, which made them popular choices of activation function in early neural networks.
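As a quick check of what "differentiable" gives us, the sketch below compares the sigmoid's analytic derivative, σ'(x) = σ(x)(1 - σ(x)), with a numerical slope estimated by a central finite difference. The step size `h = 1e-5` and the sample inputs are arbitrary illustrative choices.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x: float) -> float:
    """Analytic derivative: sigma'(x) = sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numerical_slope(f, x: float, h: float = 1e-5) -> float:
    """Central finite difference: approximates the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for x in (-2.0, 0.0, 1.5):
    print(
        f"x = {x:+.1f}  analytic = {sigmoid_derivative(x):.6f}  "
        f"numerical = {numerical_slope(sigmoid, x):.6f}"
    )
```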

Effect of Sigmoid and Tanh Non-Linear Activation:

Both Sigmoid and Tanh squash their input into a fixed range: 0 to 1 for Sigmoid and -1 to 1 for Tanh. Sigmoid's range makes it useful for binary classification, where we want the output to represent a probability.

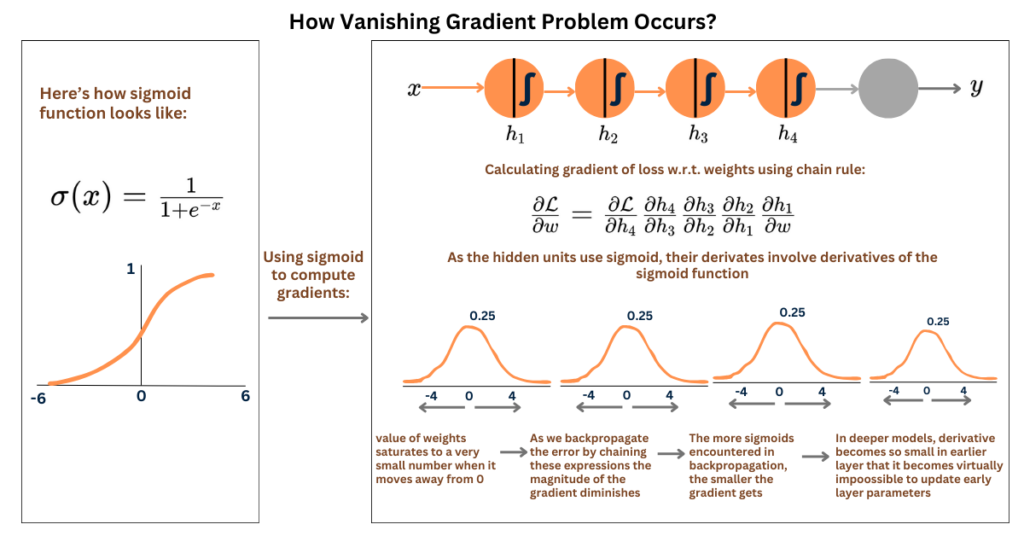
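The sketch below evaluates both functions and their derivatives on a grid of inputs from -10 to 10 (an arbitrary range chosen for illustration). It shows the two output ranges and, more importantly for what follows, that the sigmoid's derivative never exceeds 0.25 while tanh's never exceeds 1, both peaking at x = 0.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_derivative(x: float) -> float:
    """d/dx tanh(x) = 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


xs = [i / 100.0 for i in range(-1000, 1001)]  # grid over [-10, 10], includes x = 0

print("sigmoid outputs lie in", (min(map(sigmoid, xs)), max(map(sigmoid, xs))))
print("tanh outputs lie in   ", (min(map(math.tanh, xs)), max(map(math.tanh, xs))))
print("largest sigmoid'(x):", max(map(sigmoid_derivative, xs)))
print("largest tanh'(x):   ", max(map(tanh_derivative, xs)))
```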

Let’s now see what happens when we use Sigmoid functions in all layers of the neural network. For simplicity, let’s consider a network with one input layer, four hidden layers, and one output layer, each with one node. The figure below summarizes how the sigmoid activation function leads to the vanishing gradient problem.

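When we use the sigmoid activation function in every hidden layer of the network, the gradients tend to become smaller and smaller as they propagate backward, so the layers closest to the input receive the smallest gradients. This happens because the derivative of the sigmoid is at most 0.25, and the chain rule multiplies in one such factor (together with the layer's weight) for every layer.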

In deep neural networks with many layers, when the gradients of the parameters in earlier layers become very small, it becomes difficult to update these parameters during training, because small gradients mean small updates. The gradients shrink because each layer typically contributes a factor smaller than 1 to the chain-rule product.

Over many layers, these updates become so small that they effectively vanish. As a result, the network has difficulty learning meaningful representations in the earlier layers, which can hinder its overall performance.
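The sketch below reproduces the simplified setup described above: a chain of single-node layers, four hidden plus one output, each with a sigmoid activation. Assumptions not in the original: there are no biases, every weight is 0.5, the input is 1.0, the target is 0.0, and the loss is squared error, L = 0.5 (output - target)^2. It runs a forward pass, backpropagates with the chain rule, and prints the gradient of the loss with respect to each layer's weight.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def layer_gradients(x: float, target: float, weights: list[float]) -> list[float]:
    """Return dL/dw for each weight in a chain of single-node sigmoid layers.

    Assumes no biases and the squared-error loss L = 0.5 * (output - target)^2.
    """
    # Forward pass: activations[i] is the input to layer i (activations[0] = x).
    activations = [x]
    for w in weights:
        activations.append(sigmoid(w * activations[-1]))

    # Backward pass: delta holds dL/dz, where z is the current layer's pre-activation.
    output = activations[-1]
    delta = (output - target) * output * (1.0 - output)
    grads = [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grads[i] = delta * activations[i]  # dL/dw_i = dL/dz_i * (input to layer i)
        if i > 0:
            a = activations[i]  # output of the previous sigmoid layer
            # Chain rule: multiply by the weight and by sigmoid'(z_{i-1}) = a * (1 - a).
            delta = delta * weights[i] * a * (1.0 - a)
    return grads


weights = [0.5] * 5  # hidden layers 1-4, then the output layer
grads = layer_gradients(x=1.0, target=0.0, weights=weights)
for layer, g in enumerate(grads, start=1):
    name = "output" if layer == len(grads) else f"hidden {layer}"
    print(f"{name:>8}: dL/dw = {g:.2e}")
```

Each step backward multiplies the gradient by a weight and a sigmoid derivative (at most 0.25), so under these assumptions the first hidden layer's gradient ends up thousands of times smaller than the output layer's.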

Another activation function used in neural networks is the hyperbolic tangent function (Tanh), a scaled and shifted version of sigmoid: tanh(x) = 2σ(2x) - 1. Its derivative peaks at 1 rather than 0.25, but, like sigmoid, Tanh still suffers from the vanishing gradient problem, especially in deep networks. This is because tanh also saturates: for inputs of large magnitude, its output approaches -1 or 1 and its derivative approaches 0.
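The sketch below shows tanh saturating: as the input grows, tanh(x) approaches 1 and its derivative, 1 - tanh(x)^2, collapses toward 0. It also checks the relationship tanh(x) = 2σ(2x) - 1 numerically. The sample inputs are arbitrary.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def tanh_derivative(x: float) -> float:
    """d/dx tanh(x) = 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


for x in (0.0, 1.0, 3.0, 6.0):
    rescaled_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0  # should match tanh(x)
    print(
        f"x = {x:3.1f}  tanh(x) = {math.tanh(x):.6f}  "
        f"2*sigmoid(2x) - 1 = {rescaled_sigmoid:.6f}  tanh'(x) = {tanh_derivative(x):.2e}"
    )
```

At x = 6 the derivative is already on the order of 1e-5, so a gradient passing backward through a saturated tanh unit is scaled down by roughly that factor.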

3 – Understanding Vanishing Gradients Through Example

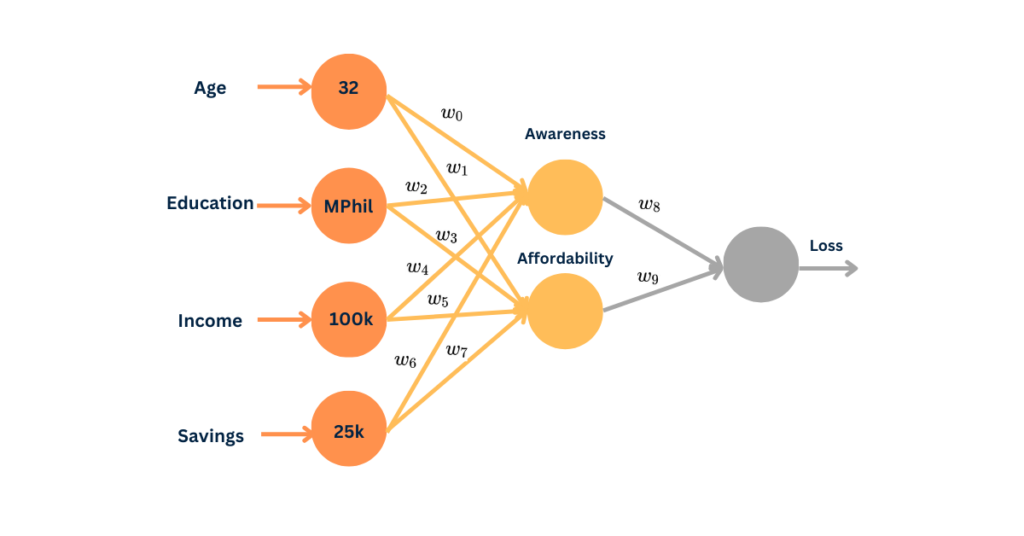

Now that we have seen the vanishing gradient problem in a simplified neural network, let’s go through an example of an ANN that tries to predict whether a person will buy insurance. The prediction is based on factors such as age, education, income, and savings:

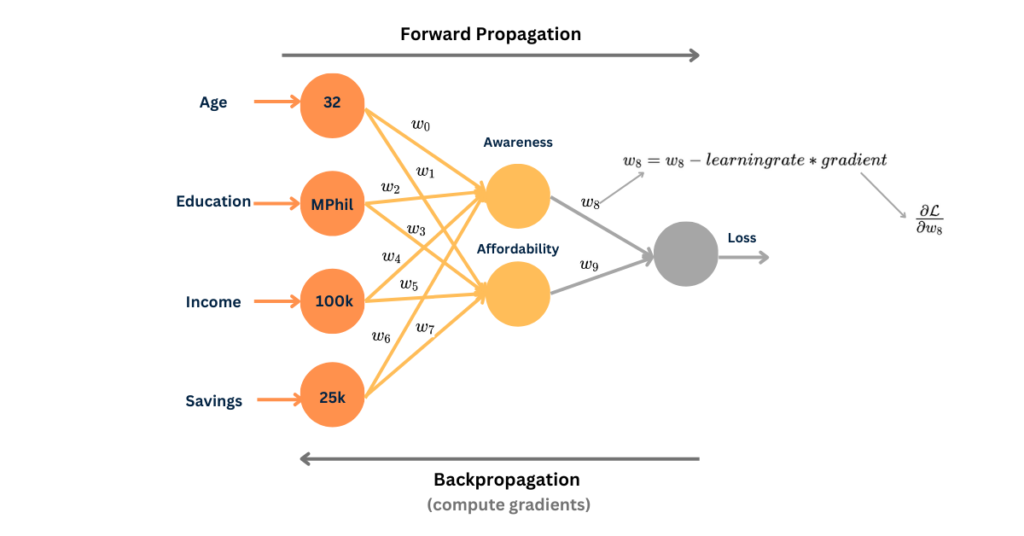

First, we perform forward propagation to calculate the loss. Next, we use backpropagation to calculate the gradients and update the weights, like this:

Let’s take w_8 as an example. To calculate its new value, we use this equation:

$$w_8 = w_8 - \text{learning rate} \times \frac{\partial \mathcal{L}}{\partial w_8}$$

The gradient $\frac{\partial \mathcal{L}}{\partial w_8}$ tells us how much the loss changes when w_8 changes.

We also know that the derivative of the sigmoid function is small (at most 0.25), so each sigmoid layer contributes a small factor to the gradient. For example, consider the gradient of the loss with respect to weight w_1:

$$\frac{\partial \mathcal{L}}{\partial w_1} = \frac{\partial \mathcal{L}}{\partial \text{Awareness}} \cdot \frac{\partial \text{Awareness}}{\partial w_1} = d_1 \cdot d_2$$

where $d_1 = \frac{\partial \mathcal{L}}{\partial \text{Awareness}}$ and $d_2 = \frac{\partial \text{Awareness}}{\partial w_1}$.

For example, if $d_1 = 0.03$ and $d_2 = 0.05$, then:

$$\frac{\partial \mathcal{L}}{\partial w_1} = 0.03 \times 0.05 = 0.0015$$

Because the weights in the earlier layers change by a much smaller amount, the learning process of the neural network suffers.

As we know, the gradients are computed by backpropagation using the chain rule. Every additional layer adds another factor to the product, so as we increase the number of layers, the gradients reaching the early layers shrink even further. This hampers the learning process.
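The arithmetic above is easy to extend. The sketch below multiplies the two factors from the example, then shows how an upper bound on the gradient shrinks with depth if every layer contributes a sigmoid derivative of at most 0.25. It assumes weights of magnitude at most 1, an illustrative simplification, since larger weights change the bound.

```python
import math


def chain_rule_product(local_derivatives: list[float]) -> float:
    """The gradient for an early weight is the product of the local derivatives on its path."""
    return math.prod(local_derivatives)


def sigmoid_gradient_bound(n_layers: int, max_weight: float = 1.0) -> float:
    """Upper bound on the product when each layer contributes |w| * sigmoid'(z) <= max_weight * 0.25."""
    return (max_weight * 0.25) ** n_layers


print("two-factor example:", chain_rule_product([0.03, 0.05]))
for n_layers in (2, 5, 10, 20):
    print(f"{n_layers:2d} sigmoid layers: gradient factor <= {sigmoid_gradient_bound(n_layers):.2e}")
```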

So, how can we solve the vanishing gradient problem, or at least alleviate it?

Here are some ways:

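- Changing how we initialize weights (for example, Xavier/Glorot or He initialization)

- Using non-saturating activation functions – such as ReLU

- Batch normalization

- Gradient clipping (note that clipping mainly targets the related exploding gradient problem)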
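To see why a non-saturating activation helps, the sketch below computes the gradient with respect to the first layer's weight in a chain of single-node layers, once with sigmoid and once with ReLU. Assumptions for illustration: every weight is 1.0, the input is 1.0, there are no biases, and the hypothetical loss is simply the network's output, so dL/d(output) = 1. ReLU's derivative is exactly 1 for positive inputs, so it does not shrink the gradient on the way back. (For negative inputs its derivative is 0, which causes a different issue known as dying ReLUs.)

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)


def relu(z: float) -> float:
    return max(0.0, z)


def relu_prime(z: float) -> float:
    return 1.0 if z > 0.0 else 0.0


ACTIVATIONS = {"sigmoid": (sigmoid, sigmoid_prime), "relu": (relu, relu_prime)}


def first_layer_gradient(activation: str, depth: int, weight: float = 1.0, x: float = 1.0) -> float:
    """dL/dw_1 for `depth` single-node layers that share the same weight value (depth >= 1)."""
    act, act_prime = ACTIVATIONS[activation]

    # Forward pass: record each layer's pre-activation z = weight * input.
    pre_activations = []
    a = x
    for _ in range(depth):
        z = weight * a
        pre_activations.append(z)
        a = act(z)

    # Backward pass with the hypothetical loss L = output, so dL/d(output) = 1.
    grad_a = 1.0
    grad_z = 0.0
    for z in reversed(pre_activations):
        grad_z = grad_a * act_prime(z)  # through the activation: dL/dz
        grad_a = grad_z * weight        # through the weight: dL/d(previous output)
    return grad_z * x                   # dL/dw_1 = dL/dz_1 * (input to layer 1)


for depth in (1, 5, 10):
    print(
        f"depth {depth:2d}: sigmoid dL/dw_1 = {first_layer_gradient('sigmoid', depth):.2e}, "
        f"ReLU dL/dw_1 = {first_layer_gradient('relu', depth):.2e}"
    )
```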

4 – How Do You Know if Your Model Has Vanishing Gradients?

One last thing: now that you know what the vanishing gradient problem is and how it occurs within a neural network, here are some signs to look out for while training that suggest your model suffers from it:

- If the loss decreases very slowly over time while training.

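- If the weights in the early layers barely change from their initial values while the later layers keep changing. (Erratic or diverging training curves more often point to the related exploding gradient problem.)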

- If the gradients become increasingly small as they propagate backward, so that the early layers of the network receive gradients close to zero.
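The last sign can be checked directly by logging the size of each layer's gradient after a backward pass. The sketch below is framework-agnostic: it takes per-layer gradients as plain lists (the values are hypothetical) and flags layers whose gradient norm is tiny compared with the largest one. The 1e-3 ratio is an arbitrary threshold, not a standard. In PyTorch, for instance, the per-parameter gradients are available as `p.grad` for each `name, p` in `model.named_parameters()` after calling `loss.backward()`.

```python
import math


def l2_norm(values: list[float]) -> float:
    return math.sqrt(sum(v * v for v in values))


def vanishing_layers(layer_grads: dict[str, list[float]], ratio: float = 1e-3) -> list[str]:
    """Names of layers whose gradient norm is below `ratio` times the largest layer's norm."""
    norms = {name: l2_norm(g) for name, g in layer_grads.items()}
    largest = max(norms.values())
    return [name for name, norm in norms.items() if norm < ratio * largest]


# Hypothetical gradients logged after one backward pass, input side first.
logged_grads = {
    "hidden1": [2e-7, -1e-7],
    "hidden2": [3e-5, 1e-5],
    "hidden3": [4e-3, -2e-3],
    "output": [0.08, 0.05],
}

for name, g in logged_grads.items():
    print(f"{name:>8}: gradient norm = {l2_norm(g):.2e}")
print("layers with possibly vanishing gradients:", vanishing_layers(logged_grads))
```

Tracking these norms over several epochs is more informative than a single snapshot: if the early layers' norms stay orders of magnitude below the later layers' while the loss stalls, vanishing gradients are a likely cause.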

Summary

- Vanishing gradient is a common issue encountered in training artificial neural networks, particularly in deep networks with many layers.

- The vanishing gradient problem slows down learning, especially in the earlier layers, because small gradients lead to slow or ineffective weight updates during training.

- It arises when activation functions like sigmoid and tanh saturate, causing gradients to become vanishingly small, hindering weight updates.

- Neural network configurations using activation functions like sigmoid and tanh are more prone to the vanishing gradient problem, especially in deep networks.
