Neural Network with Multiple Parameters – In machine learning, neural networks stand out as versatile and powerful models capable of solving a wide array of complex tasks. These networks, inspired by the structure of the brain, consist of interconnected nodes organized in layers, each contributing to the network's ability to learn and make predictions from data.

While the concept of a neural network with a single parameter helps in understanding the training process intuitively, neural networks with multiple parameters are what we use in the real world.

In this tutorial, we will learn how to train a neural network with multiple parameters, from understanding the architecture to intuitively exploring the essential steps of training.

Let’s begin.

Overview

In this tutorial, we will learn:

- How to train a neural network with more than one parameter, intuitively.

- Practical considerations when training a neural network.

1 – Train a Neural Network with Multiple Parameters

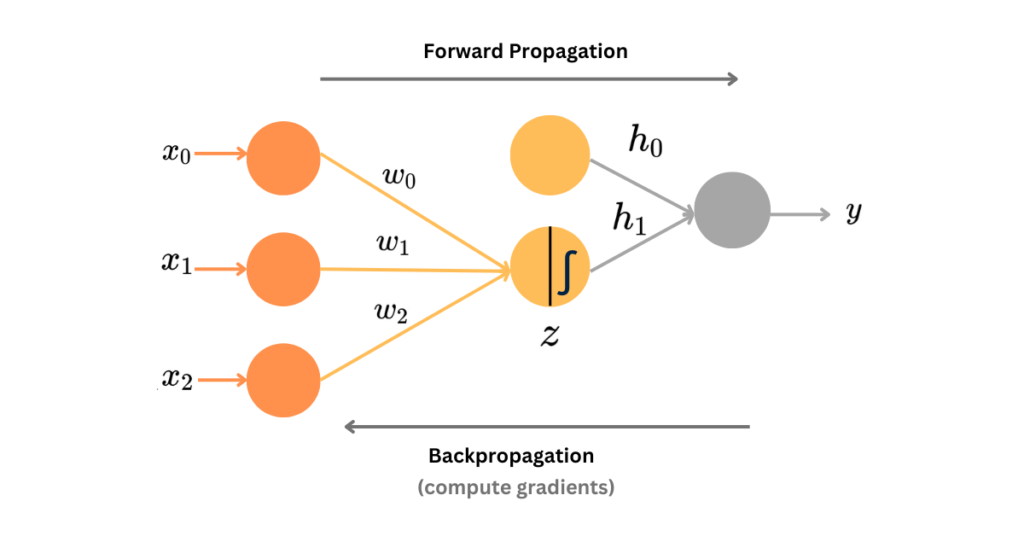

Let’s take an example of a simplified multi-layer network.

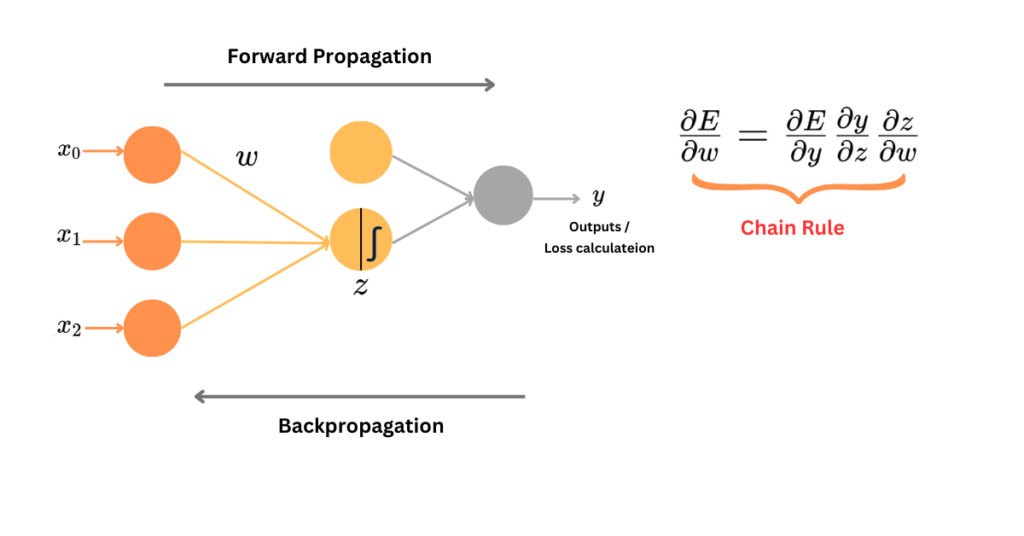

We can rewrite the derivative of the error E with respect to a weight w as follows:

\frac{\partial E}{\partial w} = \frac{\partial E}{\partial y} \frac{\partial y}{\partial z} \frac{\partial z}{\partial w}

This decomposition is the chain rule of calculus. Computing derivatives this way, layer by layer, is known as backpropagation: because the error is calculated at the output layer, we propagate it backward from the output layer through the earlier hidden layers.
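As a quick check of the chain rule, the following minimal sketch treats a single weight w. It assumes, for illustration only, a sigmoid activation, an output y = h_0 + h_1 z, and a squared-error loss E = ½(y - t)² with a hypothetical target t; all numbers are made up. The product of the three local derivatives should match a finite-difference estimate of ∂E/∂w.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

# Illustrative (hypothetical) values: one input, one weight, output parameters, target
x, w = 0.5, 0.8
h0, h1 = 0.1, 1.5
t = 0.5

def error(weight):
    z = sigmoid(x * weight)      # hidden activation
    y = h0 + h1 * z              # network output
    return 0.5 * (y - t) ** 2    # squared error (assumed loss)

# Chain rule: dE/dw = dE/dy * dy/dz * dz/dw
z = sigmoid(x * w)
y = h0 + h1 * z
dE_dy = y - t
dy_dz = h1
dz_dw = x * z * (1.0 - z)        # sigmoid'(a) = sigmoid(a) * (1 - sigmoid(a))
grad_chain = dE_dy * dy_dz * dz_dw

# Central finite-difference estimate of the same derivative
eps = 1e-6
grad_numeric = (error(w + eps) - error(w - eps)) / (2 * eps)

print(grad_chain, grad_numeric)
```

The two printed values should agree to many decimal places; a mismatch would point to an error in one of the local derivatives.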

Forward Propagation

We compute this derivative for every weight in the network. First, initialize the weights, then compute the outputs for the given data samples. This step is forward propagation:

z=\sigma(x_0w_0+x_1w_1+x_2w_2)

y=h_0 + h_1z
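A minimal forward-pass sketch of these two equations follows. It assumes σ is the logistic sigmoid (the article does not fix a particular activation) and uses made-up input and weight values; the function name `forward` is illustrative.

```python
import numpy as np

def sigmoid(a):
    """Logistic sigmoid, assumed here as the activation sigma."""
    return 1.0 / (1.0 + np.exp(-a))

def forward(x, w, h0, h1):
    """Forward propagation: z = sigma(x0*w0 + x1*w1 + x2*w2), y = h0 + h1*z."""
    a = np.dot(x, w)      # weighted sum of the inputs
    z = sigmoid(a)        # hidden-unit activation
    y = h0 + h1 * z       # network output
    return a, z, y

# Hypothetical example values
x = np.array([1.0, 0.5, -1.5])
w = np.array([0.2, -0.4, 0.1])
a, z, y = forward(x, w, h0=0.1, h1=2.0)
print(a, z, y)
```

Returning the pre-activation a and the activation z alongside y is a deliberate choice: backpropagation needs these intermediate values, so keeping them avoids recomputing the forward pass.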

Back Propagation

Then, compute the error and its partial derivatives with respect to each of the weights w_0, w_1, w_2. This step is backpropagation.

To apply the chain rule, we need the gradient of the error E with respect to y, the gradient of y with respect to z, and the gradients of z with respect to w_0, w_1, w_2.

i. Gradients of E w.r.t. y:

\frac{\partial E}{\partial y}

ii. Gradients of y w.r.t. z:

\frac{\partial y}{\partial z} = h_1

iii. Gradients of z w.r.t. w_0, w_1, w_2 :

\frac{\partial z}{\partial w_0} = x_0 \sigma'(x_0w_0 + x_1w_1 + x_2w_2)

\frac{\partial z}{\partial w_1} = x_1 \sigma'(x_0w_0 + x_1w_1 + x_2w_2)

\frac{\partial z}{\partial w_2} = x_2 \sigma'(x_0w_0 + x_1w_1 + x_2w_2)

Here, \sigma represents the activation function, which introduces non-linearity into the model, and \sigma' is its derivative. The activation function maps the weighted sum of a neuron's inputs to that neuron's output.

iv. Gradients of E w.r.t. w_0, w_1, w_2 using the chain rule:

\frac{\partial E}{\partial w_0} = \frac{\partial E}{\partial y} \times \frac{\partial y}{\partial z} \times \frac{\partial z}{\partial w_0}

\frac{\partial E}{\partial w_1} = \frac{\partial E}{\partial y} \times \frac{\partial y}{\partial z} \times \frac{\partial z}{\partial w_1}

\frac{\partial E}{\partial w_2} = \frac{\partial E}{\partial y} \times \frac{\partial y}{\partial z} \times \frac{\partial z}{\partial w_2}
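The four steps above can be sketched in code as follows. The sketch assumes a sigmoid activation, for which σ'(a) = σ(a)(1 - σ(a)), and a squared-error loss E = ½(y - t)² with a hypothetical target t, so that ∂E/∂y = y - t; neither choice is fixed by the article, and the numbers are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gradients(x, w, h0, h1, t):
    """Return [dE/dw0, dE/dw1, dE/dw2] computed with the chain rule."""
    # Forward pass
    a = np.dot(x, w)
    z = sigmoid(a)
    y = h0 + h1 * z
    # i.   dE/dy for E = 0.5 * (y - t)**2 (assumed loss)
    dE_dy = y - t
    # ii.  dy/dz
    dy_dz = h1
    # iii. dz/dw_i = x_i * sigma'(a), with sigma'(a) = z * (1 - z)
    dz_dw = x * z * (1.0 - z)
    # iv.  chain rule: the upstream factor dE/dy * dy/dz is shared by every weight
    return dE_dy * dy_dz * dz_dw

x = np.array([1.0, 0.5, -1.5])
w = np.array([0.2, -0.4, 0.1])
grad = gradients(x, w, h0=0.1, h1=2.0, t=1.0)
print(grad)
```

Note that the factor dE/dy · dy/dz is computed once and reused for all three weights; this reuse of upstream gradients is what makes backpropagation efficient in larger networks.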

Together, these partial derivatives form the gradient of E.

Each partial derivative measures how the error would change if we changed that single weight while holding all the others fixed.
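To make this concrete, the sketch below nudges one weight at a time by a small amount δ while holding the others fixed, and compares the actual change in the error with the first-order prediction ∂E/∂w_i · δ. It assumes a sigmoid activation and a squared-error loss E = ½(y - t)²; all values are illustrative.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def error(x, w, h0, h1, t):
    """Squared error E = 0.5 * (y - t)**2 of the network output (assumed loss)."""
    y = h0 + h1 * sigmoid(np.dot(x, w))
    return 0.5 * (y - t) ** 2

x = np.array([1.0, 0.5, -1.5])
w = np.array([0.2, -0.4, 0.1])
h0, h1, t = 0.1, 2.0, 1.0

# Analytic gradient via the chain rule
z = sigmoid(np.dot(x, w))
grad = (h0 + h1 * z - t) * h1 * z * (1.0 - z) * x

delta = 1e-4
base = error(x, w, h0, h1, t)
for i in range(len(w)):
    w_nudged = w.copy()
    w_nudged[i] += delta                  # change only weight i
    actual = error(x, w_nudged, h0, h1, t) - base
    predicted = grad[i] * delta           # first-order estimate from the partial derivative
    print(f"w_{i}: actual change = {actual:.3e}, predicted = {predicted:.3e}")
```

For a small δ the two columns agree closely, which is exactly what a partial derivative promises: the local rate of change of E along one weight.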

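Once we have the derivatives, we update each weight by taking a small step in the direction opposite to its gradient (gradient descent):

new weights \leftarrow (old weights) - (learning rate)(gradient)

That is, for each weight w_i and learning rate \eta: w_i \leftarrow w_i - \eta \frac{\partial E}{\partial w_i}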
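A minimal gradient-descent loop for this network might look like the following. It assumes a sigmoid activation, a squared-error loss, a single hypothetical training example, and an arbitrary learning rate of 0.5. Only w_0, w_1, w_2 are updated; h_0 and h_1 are held fixed to match the derivation.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

x = np.array([1.0, 0.5, -1.5])     # one hypothetical training input
t = 0.8                            # its hypothetical target
w = np.array([0.2, -0.4, 0.1])     # initial weights w0, w1, w2
h0, h1 = 0.1, 2.0                  # output-layer parameters (held fixed)
learning_rate = 0.5

losses = []
for step in range(50):
    # Forward propagation
    z = sigmoid(np.dot(x, w))
    y = h0 + h1 * z
    losses.append(0.5 * (y - t) ** 2)
    # Backpropagation: dE/dw_i = (y - t) * h1 * sigma'(a) * x_i
    grad = (y - t) * h1 * z * (1.0 - z) * x
    # Gradient descent: step against the gradient
    w = w - learning_rate * grad

print(losses[0], losses[-1])
```

The minus sign in the update matters: the gradient points in the direction of steepest increase of E, so subtracting it moves the weights toward lower error.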

Now that we have covered the mathematical principles behind training a neural network with multiple parameters, let's turn to the practical side of training.

2 – Practical Aspects of Training a Neural Network

Here are some practical aspects of training to keep in mind:

i. Data Preparation

The first step is to preprocess and prepare the data. This involves analyzing the dataset, scaling or normalizing the features, and splitting the dataset into training, validation, and test sets.
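Here is a sketch of these steps with NumPy on a randomly generated placeholder dataset. It shuffles, splits 70/15/15 (an arbitrary choice), and standardizes features using statistics computed on the training split only, so that no information from the validation or test sets leaks into preprocessing.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=3.0, size=(100, 3))   # placeholder features
y = rng.normal(size=100)                            # placeholder targets

# Shuffle, then split 70% / 15% / 15% into train, validation, and test sets
idx = rng.permutation(len(X))
n_train, n_val = 70, 15
train_idx = idx[:n_train]
val_idx = idx[n_train:n_train + n_val]
test_idx = idx[n_train + n_val:]

# Standardize each feature with the training-set mean and standard deviation
mean = X[train_idx].mean(axis=0)
std = X[train_idx].std(axis=0)
X_train = (X[train_idx] - mean) / std
X_val = (X[val_idx] - mean) / std
X_test = (X[test_idx] - mean) / std
y_train, y_val, y_test = y[train_idx], y[val_idx], y[test_idx]

print(X_train.shape, X_val.shape, X_test.shape)
```

Fitting the scaling statistics on the training split and reusing them for the other splits mirrors how the model will see genuinely new data after deployment.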

ii. Model Initialization

Initialize the weights and biases of the neural network. Well-chosen initialization schemes, such as Xavier (Glorot) or He initialization, keep the scale of activations and gradients roughly stable from layer to layer, which can speed up and stabilize training.
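A sketch of both schemes for a single dense layer with n_in inputs and n_out outputs, using the normal-distribution variants: Xavier (Glorot) draws weights with variance 2/(n_in + n_out), and He draws them with variance 2/n_in, which suits ReLU layers. Biases start at zero, a common default. The function names and layer sizes are illustrative.

```python
import numpy as np

def xavier_init(n_in, n_out, rng):
    """Xavier/Glorot normal initialization: Var(W) = 2 / (n_in + n_out)."""
    std = np.sqrt(2.0 / (n_in + n_out))
    return rng.normal(0.0, std, size=(n_in, n_out))

def he_init(n_in, n_out, rng):
    """He normal initialization: Var(W) = 2 / n_in (commonly used with ReLU)."""
    std = np.sqrt(2.0 / n_in)
    return rng.normal(0.0, std, size=(n_in, n_out))

rng = np.random.default_rng(42)
W_tanh = xavier_init(256, 128, rng)   # e.g. for a tanh or sigmoid layer
W_relu = he_init(256, 128, rng)       # e.g. for a ReLU layer
b = np.zeros(128)                     # biases initialized to zero

print(W_tanh.std(), W_relu.std())
```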

iii. Forward Propagation

Compute the predicted outputs of the neural network given the input data. This involves passing the input through each layer of the network and applying activation functions.
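For a network with several layers, forward propagation is a loop over the layers: multiply by the weight matrix, add the bias, and apply the activation. The sketch below assumes ReLU hidden layers and a linear output layer; the layer sizes and random weights are placeholders.

```python
import numpy as np

def relu(a):
    return np.maximum(0.0, a)

def forward(X, layers):
    """Pass a batch X of shape (n_samples, n_features) through a list of (W, b) layers.
    Hidden layers use ReLU; the last layer is left linear."""
    h = X
    for i, (W, b) in enumerate(layers):
        a = h @ W + b                          # weighted sum plus bias
        h = a if i == len(layers) - 1 else relu(a)
    return h

rng = np.random.default_rng(0)
sizes = [3, 8, 8, 1]                           # input, two hidden layers, output
layers = [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
          for m, n in zip(sizes[:-1], sizes[1:])]

X = rng.normal(size=(5, 3))                    # batch of 5 examples
out = forward(X, layers)
print(out.shape)   # (5, 1)
```

Processing a whole batch as one matrix product per layer, rather than looping over examples, is the usual design choice because it maps well onto vectorized hardware.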

iv. Loss Calculation

Calculate the loss function, which measures the discrepancy between the predicted output and the target (actual) output. Mean squared error (MSE) is a common loss for regression tasks, and cross-entropy loss is a common choice for classification tasks.
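Minimal NumPy versions of both losses are sketched below. The cross-entropy shown is the binary form for predicted probabilities p and labels in {0, 1}; probabilities are clipped away from 0 and 1 to avoid log(0). The example values are arbitrary.

```python
import numpy as np

def mse(y_pred, y_true):
    """Mean squared error for regression."""
    return np.mean((y_pred - y_true) ** 2)

def binary_cross_entropy(p, y_true, eps=1e-12):
    """Mean binary cross-entropy for predicted probabilities p and labels in {0, 1}."""
    p = np.clip(p, eps, 1.0 - eps)    # avoid log(0)
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))

print(mse(np.array([2.5, 0.0, 2.0]), np.array([3.0, -0.5, 2.0])))   # about 0.1667
print(binary_cross_entropy(np.array([0.9, 0.2]), np.array([1.0, 0.0])))
```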

v. Backpropagation

Use backpropagation to compute the gradients of the loss function with respect to the model parameters, then update the weights and biases of the network using gradient descent or one of its variants.
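As an illustration of one such variant, here is a sketch of gradient descent with momentum, which accumulates a running velocity of past gradients. It is applied to a toy quadratic loss whose gradient is known in closed form, standing in for the gradients that backpropagation would supply; the function name `momentum_step`, the learning rate, and the momentum coefficient are illustrative.

```python
import numpy as np

def momentum_step(params, grads, velocity, lr=0.1, beta=0.9):
    """One update of gradient descent with momentum:
    velocity <- beta * velocity + grads;  params <- params - lr * velocity"""
    velocity = beta * velocity + grads
    return params - lr * velocity, velocity

# Toy loss L(w) = 0.5 * ||w - w_star||^2, whose gradient is (w - w_star)
w_star = np.array([1.0, -2.0])
w = np.zeros(2)
v = np.zeros_like(w)
for _ in range(300):
    grad = w - w_star          # stands in for the backpropagated gradient
    w, v = momentum_step(w, grad, v)

print(w)
```

The velocity term smooths the update direction across steps, which can speed progress along consistent directions of the loss surface compared with plain gradient descent.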

Summary

- Training a neural network with multiple parameters starts with an intuitive understanding of the architecture and the key steps involved.

- The chain rule of calculus helps compute derivatives during backpropagation.

- Practical aspects like data preparation and model initialization are crucial for effective training.
